Here's a left-side-of-the-bell-curve way to do the Internet Archive "right":

- Create browser extension

- User loads page

- User clicks "archive" button

- Whatever is in user's browser gets signed & published to relays

- Archival event contains URL, timestamp, etc.

- Do OpenTimestamps attestation via NIP-03

- ???

- Profit

I'm sure there are a hundred details I'm glossing over, but because this is user-driven and does all the archiving "on the edge", it would just work, not only in theory but very much so in practice.

The Internet Archive can be blocked because it is centralized: when users make an archival request, they don't do the archiving themselves; they send the request to a central server that does it. And that central server can be blocked.
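The steps above can be sketched roughly as follows. This is a minimal sketch, not a spec: the kind number and the `r`/`x` tag names are assumptions made up for illustration, and signing is left out because it needs a Schnorr library. Only the event id calculation follows NIP-01 (SHA-256 over the compact JSON array `[0, pubkey, created_at, kind, tags, content]`); Python's `json.dumps` may escape rare control characters slightly differently than NIP-01 requires.

```python
import hashlib
import json
import time

# Placeholder kind for illustration only; no NIP assigns it to web archives.
HYPOTHETICAL_ARCHIVE_KIND = 9999


def compute_event_id(event):
    """NIP-01 id: sha256 of the compact JSON array of the event fields."""
    serialized = json.dumps(
        [0, event["pubkey"], event["created_at"], event["kind"],
         event["tags"], event["content"]],
        separators=(",", ":"),
        ensure_ascii=False,
    )
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()


def build_archive_event(pubkey_hex, url, page_html, created_at=None):
    """Build an unsigned archival event for whatever the browser captured."""
    if created_at is None:
        created_at = int(time.time())
    page_hash = hashlib.sha256(page_html.encode("utf-8")).hexdigest()
    event = {
        "pubkey": pubkey_hex,
        "created_at": created_at,
        "kind": HYPOTHETICAL_ARCHIVE_KIND,
        "tags": [
            ["r", url],        # assumed tag: the archived URL
            ["x", page_hash],  # assumed tag: SHA-256 of the captured HTML
        ],
        "content": "",
    }
    event["id"] = compute_event_id(event)
    return event
```

The resulting `id` is what a signing extension would sign and what an OpenTimestamps attestation would later reference.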

Replies (101)

I like the sound of this. 🫡🏴‍☠️

@Gigi You talking about using the #blockchain for this?

Yes, it needs to be stored decentralized. Maybe torrents can also be used in a way to make it more resilient?

@bodhi The People’s Record hashes to the bitcoin blockchain?

Correct!

TLSNotary-style proofs are kind of needed, imo.

GitChain :: Internet Archive Repository

Maybe a way to zap most active archivers

- Publish browser extension to zap store

I'm not saying this isn't an issue with Internet Archive, but would it be easy to spoof page contents?

If so, it would be neat to allow other npubs to sign and verify content is accurate

Just need to nostrify this (add social login)

Webrecorder

ArchiveWeb.page • Webrecorder

Archive websites as you browse with the ArchiveWeb.page Chrome extension or standalone desktop app.

how can you prevent users from tampering with the website content and posting a fake snapshot?

I think you could create a pool of "trusted" archivers that take the snapshots on your request for 1 sat or something.

Hmmm we could nostrify  where all the data & assets should be stored on relays and blossom servers.

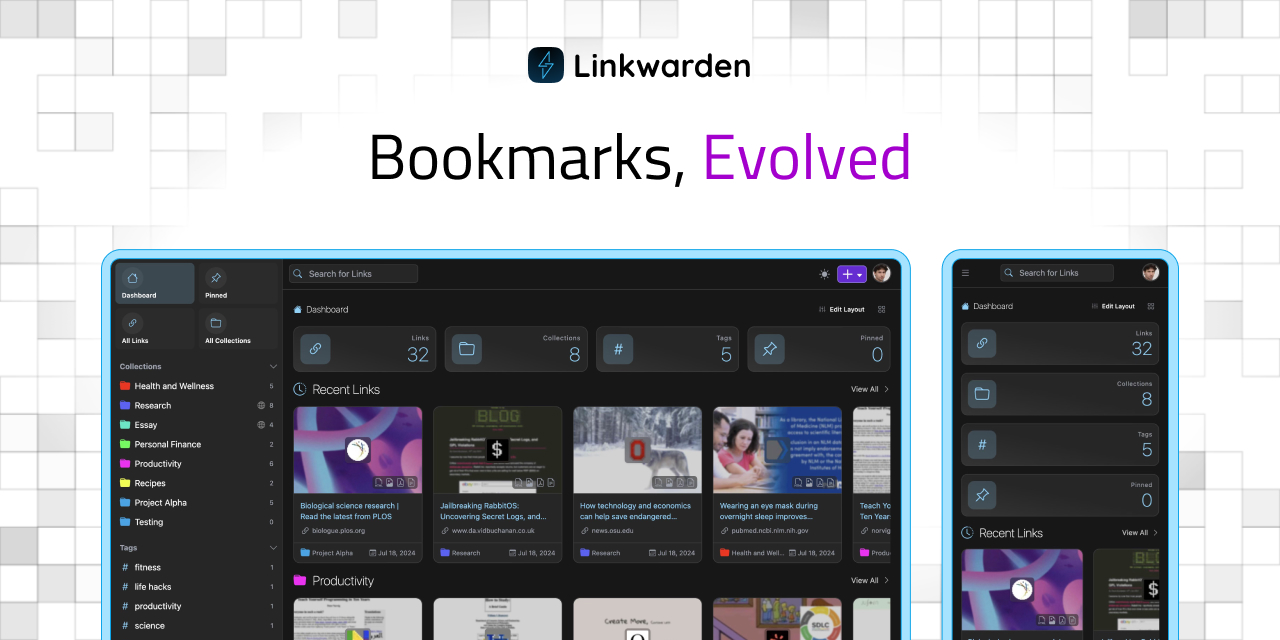

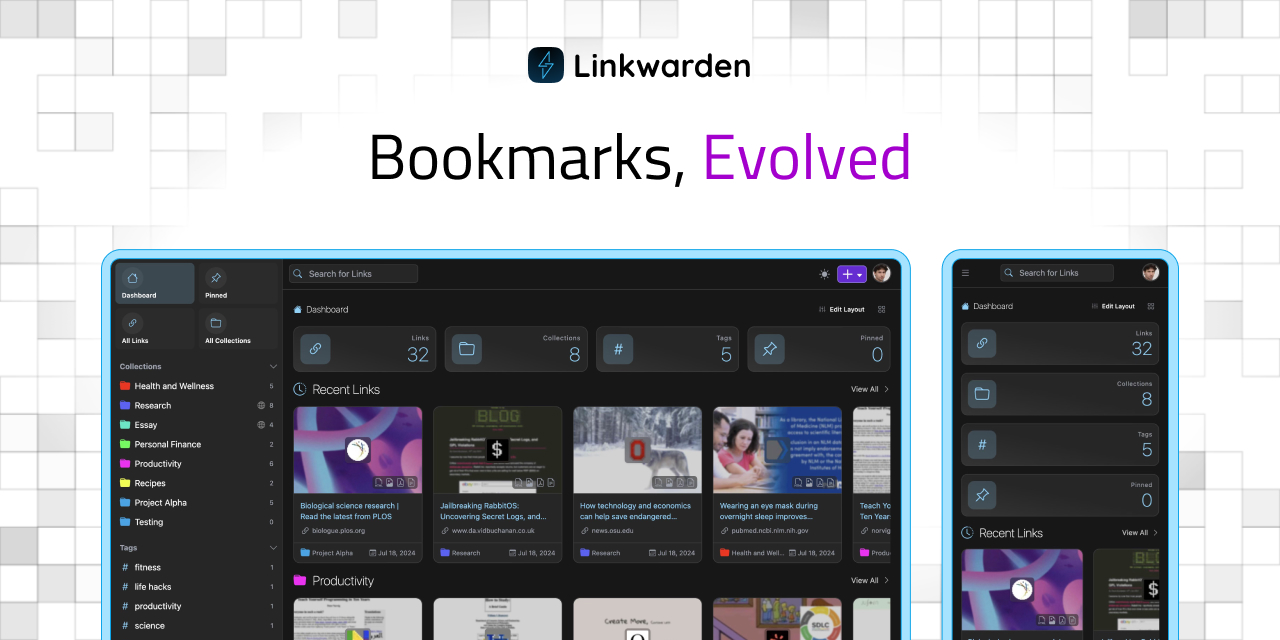

I have used Linkwarden (self-hosted) for a while for my bookmarks.

Linkwarden - Bookmarks, Evolved

Linkwarden helps you collect, read, annotate, and fully preserve what matters, all in one place.

Interesting! This extension will archive a complete website?

You can't, you have to trust the archiver, as with anything in Nostr.

Well, now we trust the internet archive don't we?

wasn't the p2p YaCy search engine doing archival, browsing, and spidering in the old days?

I'm sure you're not glossing over any details

academics might disagree but they're dead inside

I fear this would lead to soooo much personal information being doxxed by accident. I would never risk clicking such a button.

Maybe we can make sure the extension scans for PII first, but still…

The NIP on attestations that @Nathan Day developed addresses this issue. Originally for proof of place but has been generalized. Ultimately you have to trust the attestor.

User-driven archiving on #Nostr makes history harder to erase and impossible to fake.

We are the archivers now.

Attestations provide WoT for events; in this case web archives.

NostrHub

NostrHub | Discover and Publish NIPs

Explore official NIPs and publish your own custom NIPs on NostrHub.

The issue with user-driven archiving is that not all users have good intentions. Someone could try, and would probably succeed, to upload malicious "copies" of a website.

Sounds interesting.

Anyone complaining things can be faked or spoofed etc.

Yes, yes indeed, everything that is published unhashed and unsigned is fake and gay to begin with. Kill the web, all hail the new web.

Well yes, that's their whole service though. They are used because people trust them. We need to provide at least as much confidence as them.

web archiving is child's play.

what we want to do is decentralize web crawling data. nobody uses yacy, which implies it failed.

what is the total size of latest common crawl? (estimate)

Compressed size (gzip‑ed WARC) 250‑350 TB

solve this.

We absolutely need this

I would view one person archiving a webpage differently than many people archiving that same webpage.

There's no reason a service(s) couldn't coexist alongside or within a network of individuals. Said services could even recruit contributors from the wider network, or make a service out of "verifying" and storing network events that they deem "accurate", on their own relays. The 2 things would help create accountability for both.

But web pages are often served differently to different visitors. There can be location /personalization variations of exactly the same page. Even to different logged-out, “anonymous” users.

Also, a “page” is more than a single page, there are usually dozens of associated files like css, js, and images. Just saving the html won’t get you very far when you want to look at it again later.

Linkwarden looks great! What is a blossom server?

I would say a single page.

@Marty Bent is the web extension guy now. Get to work.

That is a very interesting suggestion. E.g. the hash of the single archived page should match the hash of the original single page.

?

Preach!

that's why you have multiple sources (archivers).

No and if I ever do please do not hesitate to push me in front of a moving train from a bridge with a flamethrower

Can be done implicitly. If my archived version is the same as your archived version I automatically vouch for your version.
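A minimal sketch of that implicit vouching, assuming each capture is a dict with a `pubkey` and a content `hash` (both field names are made up for illustration): captures with the same hash are grouped, and the number of distinct pubkeys behind a hash counts as its support.

```python
from collections import defaultdict


def group_vouches(captures):
    """Map each content hash to the set of pubkeys that published it."""
    vouches = defaultdict(set)
    for capture in captures:
        vouches[capture["hash"]].add(capture["pubkey"])
    return vouches


def best_supported(captures):
    """Return (hash, distinct_pubkey_count) for the most-vouched capture.

    Raises ValueError on an empty list; ties go to the hash seen first.
    """
    vouches = group_vouches(captures)
    top_hash = max(vouches, key=lambda h: len(vouches[h]))
    return top_hash, len(vouches[top_hash])
```

Counting distinct pubkeys rather than events means one npub republishing the same capture many times adds no weight. It does nothing against someone creating many npubs, which is where web of trust would have to come in.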

you could normalize some params like browser engine, agent, javascript on/off, timezone, language, etc.

it probably won't match exactly, as you probably have trackers and different scripts
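One way to make two captures comparable despite trackers and per-visitor scripts is to hash a normalized form instead of the raw HTML. The sketch below is an assumption about how that could look, not something any existing extension does: it drops `script`, `style`, `noscript`, and `iframe` content, collapses whitespace, and hashes only the remaining text.

```python
import hashlib
from html.parser import HTMLParser

SKIPPED_TAGS = {"script", "style", "noscript", "iframe"}


class TextExtractor(HTMLParser):
    """Collect text that is not inside a skipped element."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIPPED_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.chunks.append(data)


def normalized_hash(html):
    """SHA-256 of the page text with scripts, styles, and extra whitespace removed."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())
    return hashlib.sha256(text.encode("utf-8")).hexdigest()
```

This trades fidelity for comparability: two visitors get the same hash when the readable text matches, but the hash no longer covers images, layout, or anything injected into the text itself, such as personalized greetings or ads rendered as plain markup.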

is there an option to just say no without the murder part?

Whenever the HTML that’s rendered in your browser contains some personal information (e.g. an email, your legal name, whatever), it would be included in the archive page and signed by you. If you are not really really careful about what the extension includes in the page, you could leak information that you don’t want to share. The same with stuff that might not even be visible to you.

Imagine a newspaper that has a profile page modal for logged-in users. The modal is part of the HTML, but hidden via CSS until you open it. HTML scrapers would still include all the data that is part of the hidden modal, without it ever being visible during the user's visit.
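A pre-publish check for this could look something like the sketch below. It is deliberately naive and all names are hypothetical: it flags email addresses anywhere in the captured HTML and collects text inside elements hidden with the `hidden` attribute or an inline `display:none`. Elements hidden through a stylesheet class would need the browser's computed styles, which this does not cover, and badly nested HTML can confuse the element stack.

```python
import re
from html.parser import HTMLParser

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


def is_hidden(attrs):
    """True if the element carries `hidden` or an inline display:none."""
    attr_map = dict(attrs)
    style = (attr_map.get("style") or "").replace(" ", "").lower()
    return "hidden" in attr_map or "display:none" in style


class HiddenTextFinder(HTMLParser):
    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.stack = []        # one boolean per open element: is it hidden?
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            inherited = bool(self.stack) and self.stack[-1]
            self.stack.append(inherited or is_hidden(attrs))

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.stack:
            self.stack.pop()

    def handle_data(self, data):
        if self.stack and self.stack[-1] and data.strip():
            self.hidden_text.append(data.strip())


def scan_capture(html):
    """Report emails found anywhere and text found inside hidden elements."""
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return {"emails": EMAIL_RE.findall(html), "hidden_text": finder.hidden_text}
```

An extension could show this report before signing and let the user strip the flagged parts or abort.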

Interesting idea. Would it be enough to make a screenshot of the website, hash it and timestamp it?
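For the hash-and-timestamp part, a rough sketch: hash the screenshot bytes, reference that hash from an archival event, and wrap an OpenTimestamps proof for that event in a kind 1040 event. The 1040 layout below (an `e` tag pointing at the target event, base64-encoded `.ots` data as content) is my reading of NIP-03; creating the proof itself needs an OpenTimestamps client, so the proof bytes here are just an input.

```python
import base64
import hashlib


def screenshot_hash(image_bytes):
    """SHA-256 of the raw screenshot file, as lowercase hex."""
    return hashlib.sha256(image_bytes).hexdigest()


def build_ots_attestation(target_event_id, ots_proof_bytes, relay_url=""):
    """Unsigned kind 1040 event carrying an OTS proof for target_event_id."""
    e_tag = ["e", target_event_id]
    if relay_url:
        e_tag.append(relay_url)
    return {
        "kind": 1040,
        "tags": [e_tag, ["alt", "opentimestamps attestation"]],
        "content": base64.b64encode(ots_proof_bytes).decode("ascii"),
    }
```

A timestamp only proves the screenshot existed at that time. It says nothing about whether the screenshot is a faithful picture of what the site served.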

It records a session, so a whole website if you click everything

IMO recording the session is better than trying to crawl the whole site since it captures exactly what you're interested in and doesn't get confused by the way websites are built nowadays

Oh! That's a great point. One of the aforementioned 100 things I didn't think about. Solvable issue, but still an issue.

But at that point, that service needs to render and download the page. Then they need to compare it to the page downloaded by the user. You can't use a comparison operator or anything because on most pages, it will vary based on the user and device. You would need manual review and judgement calls by a reviewer, who will have to check word by word to see if anything is removed or added; then, for display, they will have to decide if differences in presentation are valid or not.

Basically, checking a page uploaded by another user is far more laborious and complex than just capturing it yourself.

The far more likely scenario if this is implemented is, there will not be a separate service verifying pages uploaded by global users, but those services will be the ones capturing and uploading the sites. So the user will still just tell these guys a link for them to screenshot, but there will be multiple competing services that the user can choose from, and they will all be interoperable from multiple clients.

While any npub can post a capture, all the clients will curate the captures based on the trustability of the npub and your captures won't be used for anything but curious people looking up shit for reference. Most clients will work on a fully whitelist basis, only showing captures from a few selected npubs, banning them at the first sign of forgery.

This is still better than the internet archive, but you will never see crowdsourced web captures that are worth shit to anyone.
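The whitelist curation described above is simple on the client side. A minimal sketch, assuming captures are dicts with `pubkey` and `created_at` fields (names chosen for illustration):

```python
def curate(captures, trusted_pubkeys, banned_pubkeys=frozenset()):
    """Keep only captures from trusted, non-banned pubkeys, newest first."""
    visible = [
        c for c in captures
        if c["pubkey"] in trusted_pubkeys and c["pubkey"] not in banned_pubkeys
    ]
    return sorted(visible, key=lambda c: c["created_at"], reverse=True)
```

Banning an npub "at the first sign of forgery" then just means adding it to `banned_pubkeys`. Deciding what counts as forgery is the hard, manual part.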

DECENTRALIZE EVERYTHING.

This is an urgent case as we live in the last days of "truth". Everything is being manipulated and erased in REAL TIME. Decentralized Internet Archive!

Love the idea. LFG!

There really need to be swift vigilantiesque public hangings and pikings for the destruction of knowledge. The total control of access to knowledge is on the same demonic wish list as total surveillance.

Ars Technica

Anthropic destroyed millions of print books to build its AI models

Company hired Google's book-scanning chief to cut up and digitize "all the books in the world."

I’m very left side. Can you just get a bot to auto archive everything

That's like the Playwright snapshots.

Consider using kind 31. We put some thought into the tags, to meet academic citation standards.

{
  "kind": 31,
  "pubkey": "<citation-writer-pubkey>",
  "tags": [
    // mandatory tags
    ["u", "<URL where citation was accessed>"],
    ["accessed_on", "<date-time in ISO 8601 format>"],
    ["title", "<title to display for citation>"],
    ["author", "<author to display for citation>"],
    // additional, optional tags
    ["published_on", "<date-time in ISO 8601 format>"],
    ["published_by", "<who published the citation>"],
    ["version", "<version or edition of the publication>"],
    ["location", "<where was it written or published>"],
    ["g", "<geohash of the precise location>"],
    ["open_timestamp", "<`e` tag of kind 1040 event>"],
    ["summary", "<short explanation of which topics the citation covers>"]
  ],
  "content": "<text cited>"
}
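As an illustration, a kind 31 event with these tags could be assembled and checked like this. The tag names come from the template above; the helper names are made up, and signing and publishing are left out.

```python
from datetime import datetime, timezone

MANDATORY_TAGS = ("u", "accessed_on", "title", "author")


def build_citation(pubkey, cited_text, url, title, author, accessed_on=None, **optional):
    """Assemble an unsigned kind 31 citation event from the template's tags."""
    if accessed_on is None:
        accessed_on = datetime.now(timezone.utc)
    tags = [
        ["u", url],
        ["accessed_on", accessed_on.isoformat()],  # ISO 8601
        ["title", title],
        ["author", author],
    ]
    # Optional tags such as published_on, version, or summary.
    for name, value in optional.items():
        tags.append([name, str(value)])
    return {"kind": 31, "pubkey": pubkey, "tags": tags, "content": cited_text}


def missing_mandatory_tags(event):
    """List the mandatory tag names that the event does not carry."""
    present = {tag[0] for tag in event["tags"] if tag}
    return [name for name in MANDATORY_TAGS if name not in present]
```

A client could run `missing_mandatory_tags` on incoming events and ignore citations that come back with a non-empty list.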

That's very much right-side-of-the-bell-curve from the looks of it.

A place where media presented on the Nostr protocol are stored, compressed to be opened by any client. When you upload a video or image via a Nostr app you can choose a blossom server that stores it for you. It can be one that you run yourself forever, so that you own your data. You don't want to store everything on the relays for many reasons...

If we wanna use something existing as the base and add some nostr magic to it I'd probably go with something like ArchiveBox

GitHub

GitHub - ArchiveBox/ArchiveBox: 🗃 Open source self-hosted web archiving. Takes URLs/browser history/bookmarks/Pocket/Pinboard/etc., saves HTML, JS, PDFs, media, and more...

🗃 Open source self-hosted web archiving. Takes URLs/browser history/bookmarks/Pocket/Pinboard/etc., saves HTML, JS, PDFs, media, and more... - A...

Yuge. Immediate fork, with upstream nostr PR would be 🔥

This is why we have the web of trust

Or better yet:  - very simple, and is a browser extension already.

GitHub

GitHub - gildas-lormeau/SingleFile: Web Extension for saving a faithful copy of a complete web page in a single HTML file

Web Extension for saving a faithful copy of a complete web page in a single HTML file - gildas-lormeau/SingleFile

@fiatjaf @Terry Yiu can y’all combine with nostr browser extension?

Push output HTML file to blossom & create archive event accordingly.

SingleFile can even be set up to push things to an arbitrary API. So it should be possible to jerry-rig this quite quickly with some spit and some duct tape.
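The glue between the saved HTML file and the archive event could be as small as the sketch below. Blossom addresses blobs by their SHA-256, so the hash of the file determines where it can be fetched. The server URL, the `.html` suffix, and the tag names in `archive_tags` are assumptions for illustration, and the upload itself (as far as I understand the Blossom spec, a `PUT /upload` with a signed authorization event) is not shown.

```python
import hashlib


def blob_descriptor(html_bytes, server_url):
    """Describe where a blob would live on a Blossom server, based on its hash."""
    digest = hashlib.sha256(html_bytes).hexdigest()
    return {
        "sha256": digest,
        "size": len(html_bytes),
        "url": f"{server_url.rstrip('/')}/{digest}.html",
    }


def archive_tags(page_url, descriptor):
    """Assumed tag layout linking the archived page URL to the stored blob."""
    return [
        ["r", page_url],
        ["x", descriptor["sha256"]],
        ["url", descriptor["url"]],
    ]
```

Because the address is the hash, anyone who downloads the file can check that it matches what the archive event claims, no matter which server handed it over.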

@hzrd149 does blossom have some micro-payment mechanism to allow payment for server costs of hosting a webpage?

Maybe per file/webpage?

Very interesting. Thank you. Do you have a good link for beginners to run your own relay?

Read about it before. Crazy how digital archives are targeted too...but this here is outrageous!

Yeah, I don't want to click links and deal with paywalls, ads, and trackers. Instead I would like to see a long form note containing the archived page. Notes that contain archived pages can also benefit from disqus-style comments.

#YESTR

"Whatever is in user's browser gets signed & published to relays".

This is the problem. For paywalled content, how can we be sure that there is no beacon stored somewhere in the page (DOM, js, html) that identifies the subscriber?

duct tape is stupid short sighted, you need a decent standard

true

we need this

@utxo the webmaster 🧑‍💻

It depends on the server. Some servers can require the user to be subscribed. But there is also an option to pay-per-request. I built an example server using it

GitHub

GitHub - hzrd149/blob-drop: A blossom server that only stores blobs for a day

A blossom server that only stores blobs for a day. Contribute to hzrd149/blob-drop development by creating an account on GitHub.

I hate to bring the bad news, but without some added mechanism this would only work for truly static pages (i.e. those where the same .html file is served over and over again), whereas most of the content served is tainted with server-generated “fluff”, which could range from some ads on the sidebar to the very text of an article being changed.

My point is: even if we both visit the same “page” at the same time, it’s more likely than not that we’ll get different versions served, even if they differ only by a few lines of code and would look identical as far as the actual content is concerned.

Let's focus on regular content and cross that bridge if we get there.

The main issue is that the big services are centralized and the self-hosted stuff isn't syndicated.

are paywalling services doing that - and punishing the user for screenshotting etc?

hahahahahahahshahahaha

that’s so crazy if so. wow

They're trying everything in their power to make water not wet.

i would have for sure read that years ago, but a great reminder ty gigi

i knew they were trying to use this on music but i didn't realise they were embedding gotcha code so they can police how the user uses their computer, and heaven forbid, copies something. fucking hilarious.

hard to imagine why they’re dying such a quick death 😂

they’re suiciding themselves. making their product shit all because they cant come to terms with the characteristics of water.

i guess we should thank them

talk about failing the ego test

How does Archive do it?

I don't think the goal has ever been to make data impossible to copy. The goal is most likely to make copying certain data more difficult. DRM has done that, whether you like it or not. The industry wouldn't do it if it didn't work to some degree.

But I also hate DRM and how it works. Totally agree on that.

Futile action by a dying industry.

not a dying industry… the players will just change and the rules of engagement will evolve

That’s a good thought. I have an extension I’m working on that bridges the web over to nostr, allowing users to create discussions anywhere on the web using nostr. It seems like an archive function would be a solid addition. If I can get the universal grill box idea solid I will work on the archival concept as well.

It’s signed by the user and the reputation becomes king.

You can’t but reputation of the archivist will come into play, you could also have multiple archives and ultimately there would be a consensus.

Linkwarden has one big problem with cookie-consent dialogs. Your PDF will likely contain only that fcking popup.

All the JavaScript getting ingested? Worried about the privacy part but very interesting.

Calling all vibe coders!

I've been casually vibe coding this since Wednesday. I think it's quite a powerful idea. I have zero experience with making an extension, but it's the first time AI called a project 'seriously impressive' when I threw Gigi's idea in there.

So far I have come up with a few additional features but the spec would be this at a minimum:

OTS via NIP-03

Blossom for media

3 different types of archiving modes:

Forensic Mode: Clean server fetch, zero browser involvement = no tampering

Verified Mode: Dual capture (server + local) + automatic comparison = manipulation detection

Personal Mode: Exact browser view including logged-in content = your evidence

Still debugging Blossom integration and NIP-07 for signing extensions seems tricky. The only caveat is you would need a proxy to run verified + forensic modes, as CORS will block requests otherwise. Not sure how that would be handled other than hosting a proxy. Once I have a somewhat working version I may just throw all the source code out there, I dunno.
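The automatic comparison in Verified Mode could start out as a plain line diff between the server capture and the local capture. This is only a guess at one possible approach, using Python's standard `difflib`; the 0.9 threshold is arbitrary, and real pages would need normalization first or nearly every capture would get flagged.

```python
import difflib


def compare_captures(server_html, local_html, threshold=0.9):
    """Compare two captures line by line and report how much they differ."""
    server_lines = server_html.splitlines()
    local_lines = local_html.splitlines()
    ratio = difflib.SequenceMatcher(None, server_lines, local_lines).ratio()
    diff = list(difflib.unified_diff(
        server_lines, local_lines, "server", "local", lineterm=""))
    # Skip the two file header lines, then keep only added or removed lines.
    changed = [line for line in diff[2:] if line.startswith(("+", "-"))]
    return {
        "similarity": ratio,
        "matches": ratio >= threshold,
        "changed_lines": changed,
    }
```

The `changed_lines` list is what a user or reviewer would look at to decide whether a difference is an ad rotation or an actual manipulation of the article text.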

Some test archives I've done on a burner account using this custom Nostr archive explorer here.

Nostrie - Nostr Web Archive Explorer

You say this is left-side but there is nothing on the right-side of the curve since what you describe here is already at maximum complexity. And that archiver extension is a mess.

But sure, it's a good idea, so it must be done.

Because you don't use Chromium? The web is a farce, I know, we should replace it.

I made this extension: https://github.com/fiatjaf/nostr-web-archiver/releases/tag/whatever, which is heavily modified from that other one.

Damn, this "Lit" framework for making webgarbage is truly horrible, and this codebase is a mess worse than mine, but I'm glad they have the dirty parts of actually archiving the pages working pretty well.

Then there is for browsing archives from others.

Please someone test this. If I have to test it again myself I'll cry. I must wait some days now to see if Google approves this extension on their store, meanwhile you can install it manually from the link above.

websitestr

👀

Please keep things uploaded into non-Google sites 🙏

Google is sold, Google is finished and should be heavily boycotted for what they're doing to us 🕳️🐇

It works. I'm not sure how to view my own, but my Amber log shows what I think is all the right activities.

I'm not sure what the crying is about. This extension is more cooperative than the scrobbler one.

Longest quote on Nostr?! lol
