The combo of 1) people getting sucked into digital echo chambers and 2) people believing hallucinating AI answers without checking is going to take a lot to change.

We are in an environment where if things *look* official enough, we’ll usually just instantly believe them, since nobody has the time or inclination to check everything.

Like, someone can just tweet a picture of me at a conference, put a quote next to it, and tons of people will take it at face value. People won’t stop and ask “is this actually a quote of hers from this conference?” It could be from years ago, out of context, or not said by me at all, but one would never know since it seems legit enough.

The current counter to this is basically to assume most things are potentially wrong in part or in full, unless further verified. But the risk there is that people get detached and don’t bother researching things.

One thing you can do is go through your follow list and remove people/entities who don’t have a high signal ratio. In other words, keep people you agree or disagree with that are locked in and high signal, but remove those who parrot things they don’t understand or spread misinformation on a regular basis.

In an environment of endless quantity, it is more important than ever to elevate quality.

Replies (67)

I could not agree more.

I’ve made sure to carefully curate my feed for this reason. It’s self-sovereignty over algo 🫡

“In an environment of endless quantity, it is more important than ever to elevate quality.”

🎯

Wholeheartedly agree

Just (or I should say, try to) apply the Trivium.

I guess it's akin to "first principles"

Trivium - Wikipedia

People will have to research or pay the price

How do you calculate that Signal Ratio? And when is it High?

Is it when they post, comment, receive zaps?

Can that be calculated on Nostr?

And it is also true for mainstream media, and for any way news comes to us.

Web of trust is coming; it is the next huge challenge.

And we need to keep privacy a high priority too.

Your appetite for truth is remarkable, like that of any Nostrich here. Yeah, we can totally do that with an AI: bring in the highest signal and build something with the most beneficial knowledge in it.

truth resonates differently

it’s possible to train our ears, so i have hope the necessity will drive adaptation.

but you’re absolutely right, it’s going to be a challenge

I agree with your description and I think it's quite accurate.

I do believe, however, that this dynamic would be much less of a problem if strangers and public opinion didn't have violently enforceable power over you.

If people's false beliefs and misguided opinions didn't result in laws that made my life unbearable, I wouldn't particularly mind them holding said opinions and beliefs.

I completely agree with you, we should unfollow Peter Todd who just parrots U.S. bank cartel talking points.

This is the Way.

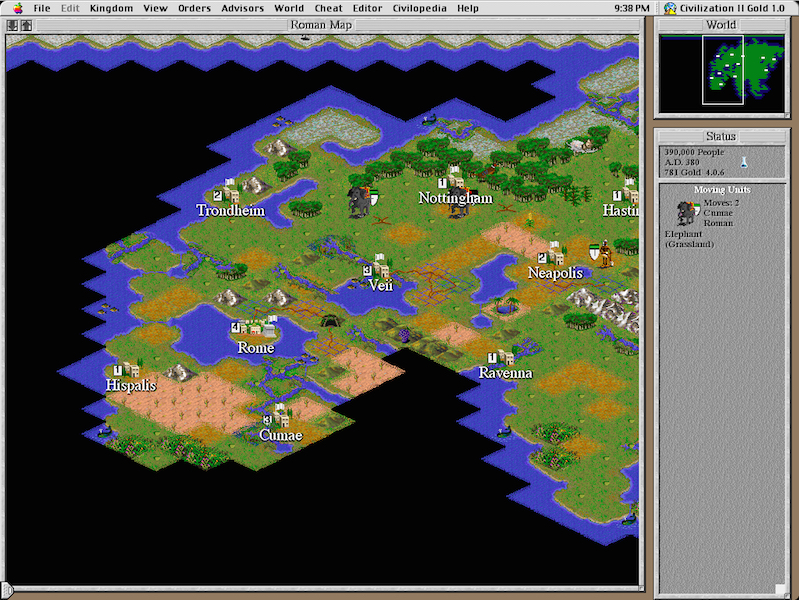

Digitally signed messages help filter the signal from the noise. I can't believe how many Nostriches I spot in Vegas just by looking at them.

People wearing suits, badges, bitcoin flair, etc. are a different faction I've never seen before. YouTube BTCMaxis or something. I bet most of them have never heard of A Cypherpunk's Manifesto, published in 1993.

The selection is a very complicated process. It has been done for years in many groups and societies. It takes time because people only reveal themselves when facing some issue, when they cannot hide anymore.

This 👇🏻

We live in a WORLD of SIMULATION

ᴿᵉᵃˡⁱᵗʸ ⁱˢ ʰⁱᵈᵈᵉⁿ ⁱⁿ ˡᵃʸᵉʳˢ

🌎👺

1. Emulation

2. Modeling

3. Imitation

4. Representation

5. Pretense

6. Reproduction

7. Virtualization

8. Simulacrum

9. Alteration

10. Control

11. Influence

12. Handling

13. Distortion

14. Falsification

15. Intervention

16. Deception

usually the clearest sign is when someone talks in a very in-group vs. out-group kind of way. Like the self-other thing. Like some other group is inferior to ‘us’ and our group.

Feed curating is the way

I think it was Plato who said that we should look for the truth, the good, and the beautiful in what we choose.

In Bitcoin we found them.

“In an environment of endless quantity, it is more important than ever to elevate quality”

Inflation of information is entropy for the mind. The nonce is hard to find in a growing sea of entropy.

All information of value will be pegged to proof of work. In order to do this, we actually need to understand what bitcoin is, because there is no second best.

Going to rebrand as high signal shitposter

I think you just need to hit the unfollow button when you spot some bullshit.

this made me chuckle. But of course, it’s also the comprehensively imagined details that make them loved by fans. Like chocolate frogs that jump around, being sold on a train, where one boy from a large family has no money to buy any, and another boy, who lost his parents but was left financially secure, buys some to share with no sense of superiority... he’s just happy to have a nice friend.

digital reputation and webs of trust are the answer

yes, it won't be perfect, but it's the best path

Perhaps an AI fact-checker extension on Nostr could be built that provides linkable proof or a reliability score for posts. Couple that with our personalized web of trust. . .

Give the user the power to filter posts as they choose

This totally fucked with my head lol

identifying people who parrot things they don't understand is not that easy; there's a risk you just remove those you don't like

Me and my frens on quality shitposts!

Most people don't understand things and don't want to, they just want confirmation of their emotions.

Maximalism?

Soon only bitcoin will be trusted online

Who would do such a thing???

But there was some Web of Trust thing they were doing... So maybe the combined effort of follows / unfollows can streamline things too.

but remove those who parrot things they don’t understand or spread misinformation on a regular basis.

😂

I can get behind this.

💯

Number 2 is interesting.

You can ask AI a question, and get a generic answer.

If you challenge the answer with an alternate view, it will say "you're right, blah blah blah"

Maybe not in all instances, but too many people are starting to see it as a higher truth, even while recognising that Google can present wrong info.

I sometimes think, "if I had the ability to create a perfect environment for learning - like a Matrix, where all variables are controlled to get a learning response, where students graduate by becoming attentive critical thinkers, who ask the right questions and aren't easily fooled..."

And then I realize that it would just be this world. It would be identical.

Maybe - I'm not saying it is, but maybe - the reason most people are easily fooled is because in a greater frame, there's a selection bias going on. The ones who graduated aren't in the classroom anymore. And maybe all the Stupid, the clown world stuff, is one of those controlled variables that optimize our learning. Maybe.

Legacy media have been doing this forever though… “finessing” the narrative

This was always a problem. Digital media efficiencies are exacerbating it. For the best, I would argue.

There are those who will learn to verify critical information, given the lack of source reliability. Likely more of them than there were in the 20th century, when there were fewer media outlets and propaganda sources were more centralized.

In short, people are retarded.

Going to do this. No hard feelings folks. @Lyn Alden is right.

We’ve been creating various forms of satirical content crafted to seem “official” or like a legitimate advertisement for the past two years (most of it incorporating AI). Low-effort AI slop can fool the majority of people. Done well, you can fool 99% of people. Even if you’re deep in the trenches with this stuff, you can be fooled by it. So far the only solution we’ve found effective is listening to 40 hours of Bitcoin podcasts, per week. No cap 🧢

Trust (like respect) should be earned, not given ab initio. In the meantime, be unswayed or verify.

Funny you post this on Nostr, the echo chamber of echo chambers.

Funny that you’re here.

‘In an environment of endless quantity, it is more important than ever to elevate quality.’

Another Alden maxim for the ages!

I could not agree more, particularly as I have worked in that area, in various critical fields, for many decades.

This is an exciting time, though, and I even feel the temptation to take shortcuts myself sometimes.

Keep up the important analysis and communication work, Lyn, and I will continue to do my small part too.

Hence why being on a communication protocol that doesn’t profit from confusion, misdirection, and perpetuating digital echo chambers will be so important. ⚡️

With AI, checking the source has only increased in importance. References/sources should always be listed, which is why I prefer Perplexity to ChatGPT. However, it is much more interesting to read what you say/write than to read what other people say you said/wrote. Pretty simple.

Nostr fixes this?

For example if the real Lyn posted something on Nostr

I know it was her bc it was posted by her npub?

Well said! We will need new tools and new ways of thinking to adapt to this new information landscape

People are like that. They believe and follow authorities. It is their nature and that cannot be changed. Those who think, check and reason are the only ones. We always have to verify information, but even that is not easy. It is always a matter of trust. Who are we going to trust, has he lied to us before, has he been misled by another, etc.

🤣

You have to know this before you can find the liars in journalism. Who not to trust!

You should always go to the source of the news to verify the truth. It is the basis of information and journalism. You can immediately tell when a news story or a post is true, or whether it needs to be verified 🤷♂️

How would we measure 'signal ratio'?

I don't think it's magic, but Nostr does give us a platform to be able to build the tools we need to make it work better. One example would be some kind of review service of the posts from a profile that helps to judge whether what is being posted is more signal than noise. Truth or fiction. If the protocol itself, or tools built within or around the protocol, could help us determine which profiles we follow can be trusted to be posting verified and real content, it would go a long way towards a foundation for information we can trust. By adapting journalism’s principles (accuracy, independence, fairness, transparency, accountability, and public service), this service can filter signal from noise, leveraging tools like fact-checking bots, trust scoring algorithms, and community governance. Grounded in Claude Shannon’s information theory from the 1940s, the service reduces entropy and increases mutual information, ensuring clearer, more reliable communication. Challenges like gaming or subjectivity can be mitigated through reputation systems and transparency, making Nostr a foundation for trusted digital information.

sure, you can find it there. You can find it anywhere! lol, that came out sounding slightly Dr. Seuss-like 🪿

BlockFi

🧐
