Replies (119)

😂😂🤣

... but also a masterful work, piece of art!

lmfao, not a fan of filters but this is well made, Super

Fucking epic!

thanks! To be clear, it's only a joke, not to be taken as serious criticism of anyone or any position

Relevant:

@Magoo PhD you need to see this

... It's Bailey though, right? It's gotta be Bailey.

It's an amalgam of opinions I've heard from several people, among whom, in alphabetical order, are:

- Antoine Poinsot (he says filter fans are morons and larpers)

- Calle (he says Knots only has one developer, and ridicules filters)

- Hunter Beast (he says it's good to use bitcoin as a text dump)

- Jameson Lopp (he is a bitcoin core dev with a financial interest in using bitcoin as a text dump, as an investor in Citrea)

- MrHodl and Post Capone (the stuff on economic nodes is inspired by them)

- Shinobi (he says plebs have no expertise to judge these matters)

The video, however, is only a joke, and is not meant to attribute an opinion to any particular person or criticize anyone or any opinion in particular

Based.

This was so hilarious and clever

EDIT: I take back the statement that Antoine calls filterers morons; that is Shinobi (https://x.com/brian_trollz/status/1950547963002118196)

Yeah but I’d be offended if it were the other way round. Nobody gets to call me a knotsie. I just RUN knots, see?

I thought about having him say "If there's one thing I can't stand, it's a knotzi" -- which would have been ironic but a bit too on the nose

Good times 😂

Good Night nostr

Bitcoin is going to survive this attack, purge the Judas’, and become a stronger, more decentralized monetary network.

A sincere thanks to any Core grafters for over-playing their hand and exposing their own corruption.

Watching this video was arguably the highlight of my day. Life is good.

As long as Knots supports covenants they're just as guilty...

... but the meme is excellent.

Good news for you my friend, knots has zero built in support for covenants (except if you consider n of n multisigs a type of covenant, as I do)

But [they] are *supportive* of at least CTV

Imagine when Knots buddies realize that they can't reject a single block full of Ordinals if it was previously validated by the network...

Pretty funny, still wouldn’t touch Luke’s software project with your node.

Fucking love this!!

So has anyone actually done the math on how much the orphan rate might go up, e.g. as a function of x% of the relay network not having y% of the transactions in a block in their mempool, or some such?

♥

und Stalin -> and stall them 🤣

Perfetto 🤌 I can taste the salty tears of all the corrupt pricks that support data dumps.

"Ah, the beauty of diverse perspectives! It's fascinating how passion can spark such spirited conversations. Here’s to finding common ground amidst the saltiness! 🌊✨"

Not really. CTV advocates hate filterers and vice versa.

😆😂🤣💀

Love this 🤭

I hope my ideological opponents laugh at the joke rather than cry. It is not intended as a serious portrayal of their opinions.

Some of us are CTV advocates *and* filterers. Myself and @Chris Guida for example.

hahah, i fucking love when people make memes using this scene from Downfall.

They might laugh at your jokes but it won't help them stomach knots NGU.

Lol, you already need Hitler to bring gullible people to knots?

What about the downsides of knots? Why does no one work on those?

Knots stands on a single person. (A bit too centralized in my mind)

Knots forces you to update your node. (Really, this is what you promote?)

Running knots has literally zero impact, as you see: there will always be "spam" as long as there is a market and they pay.

Everyone who is capable of installing knots can also simply adjust the op_return limit in core.

Let's see how far knots can make it until it crashes from bugs.

The good thing is that it showed me how many people in #Bitcoin can be manipulated that easily without even a single argument...

> you already need Hitler to bring gullible people to knots?

It's not to bring anyone to knots, it's to make people laugh

> What about the downsides of knots? Why does no one work on those?

Lots of people work on them. As an example from one of the downsides you brought up, here are people working on it (see the linked pull request: Rework Software Expiry, Pull Request #134, bitcoinknots/bitcoin).

> Knots stands on a single person. (A bit too centralized in my mind)

At least 10 people work on knots.

> Knots forces you to update your node. (Really, this is what you promote?)

It can't force you to do anything. It is software, you choose whether or not you want to run it, and if you opt to run it, you opt into the consequences. If you don't like them, don't run it. I recommend checking out Bitcoin Core for a different set of consequences.

> Running knots has literally zero impact, as you see

I don't see that. It has consequences on you; it has consequences on your peers; it has consequences on block propagation; and, according to you, it forces you to update your node, along with potentially other less specified dangers.

> there will always be "spam" as long as there is a market and they pay

True. Also true: if more people fight it there may be less of it

> Everyone who is capable of installing knots can also simply adjust the op_return limit in core

Partially. But not fully. E.g. you can't reject transactions with multiple op_returns.
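To make the "multiple op_returns" point concrete, here is a minimal sketch of what a local relay check of that kind could look like. It is not code from Knots or Core: `count_op_return_outputs`, `passes_local_policy`, and the `max_op_returns` parameter are hypothetical names, and outputs are assumed to be passed in as raw scriptPubKey bytes. The only protocol fact it relies on is that the OP_RETURN opcode is the byte 0x6a.

```python
OP_RETURN = 0x6A  # opcode byte that marks a provably unspendable data output


def count_op_return_outputs(script_pubkeys):
    """Count outputs whose scriptPubKey starts with OP_RETURN."""
    return sum(1 for spk in script_pubkeys if len(spk) > 0 and spk[0] == OP_RETURN)


def passes_local_policy(script_pubkeys, max_op_returns=1):
    """Hypothetical local relay rule: refuse to relay a transaction that
    carries more than `max_op_returns` OP_RETURN outputs."""
    return count_op_return_outputs(script_pubkeys) <= max_op_returns


# Example scripts: one P2PKH-style output and two small OP_RETURN outputs.
p2pkh = bytes.fromhex("76a914" + "00" * 20 + "88ac")
data_a = bytes.fromhex("6a0568656c6c6f")  # OP_RETURN, push 5 bytes, "hello"
data_b = bytes.fromhex("6a026869")        # OP_RETURN, push 2 bytes, "hi"

single = [p2pkh, data_a]
double = [p2pkh, data_a, data_b]
```

With the default of one allowed data output, `single` would be relayed and `double` would not. Like any mempool policy, this only affects what the local node relays, not what is valid in a block.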

> Let's see how far knots can make it until it crashes from bugs.

Yes, let's.

GitHub

Rework Software Expiry · Pull Request #134 · bitcoinknots/bitcoin

As an alternative to #124.

Add a warning on startup within 4 weeks of expiry to bitcoind and bitcoin-qt.

Add a note on how to override in the exis...

Fair, I should have said “most”.

this is a well known meme you retard

he is not a retard, he just disagrees with us on something

Excellent work, loved the "they don't hate you" part LOL

love it, amazing 🤣👏

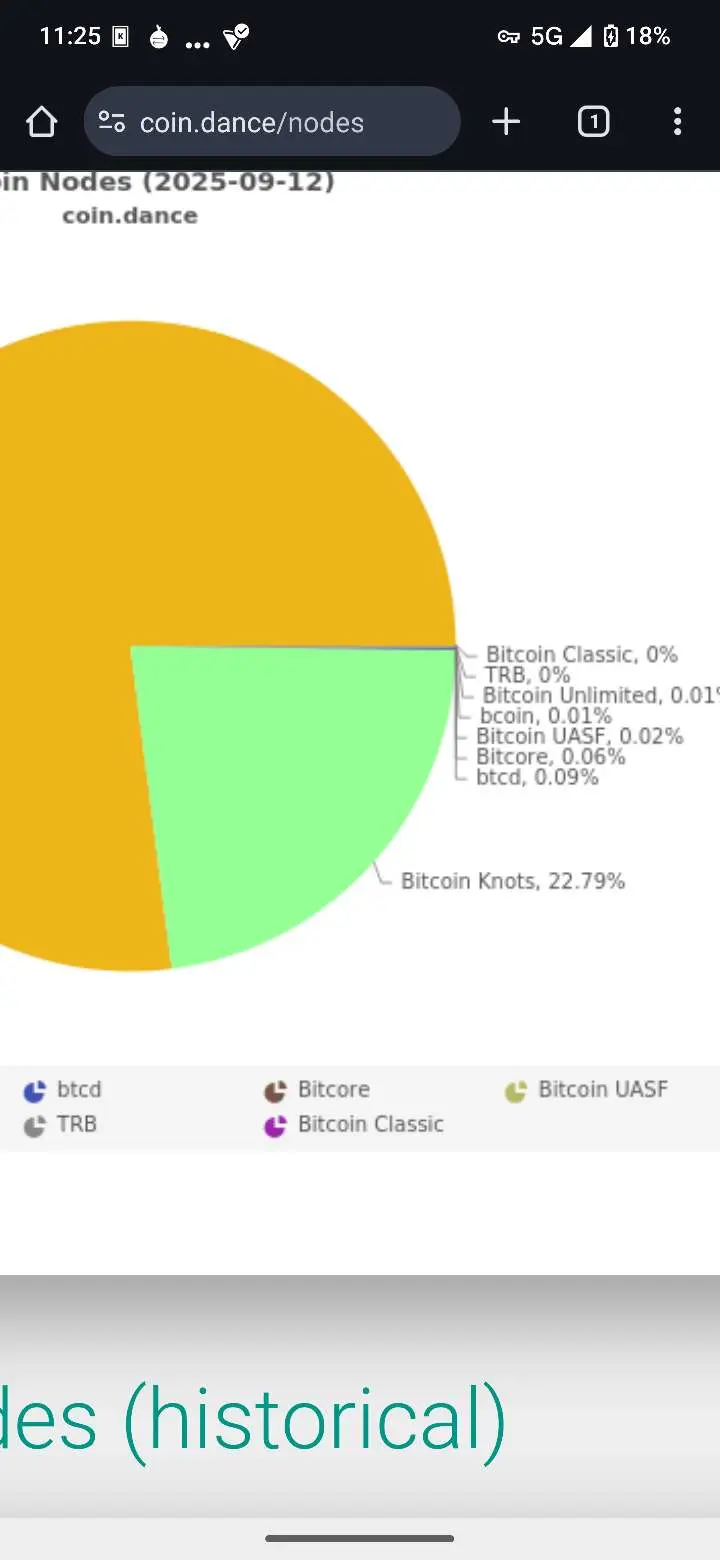

Talking about sybil attacks, have you seen the node count that the newest knots version is at on the moody dashboard?

I see it. We jumped almost 3% in one day, and over 1000 knots nodes came online today. I do not think they are all real. However, there was also a noticeable drop in Core nodes today, and that is harder to fake. I'm not sure what's going on, but right now my working hypothesis is: on the Core side, a bunch of people are dropping out; on the Knots side, a mix of sybils and newcomers from Core are bulging the Knots numbers.

Fake core nodes running out of vps credits

Yeah, but it's the same bullshit argument as wearing a mask to "protect" the others...

Why do you want to force me to wear it if you already wear it and you feel "protected"... wear it and STFU, leave me alone.

@Matthew Kratter I think you’ll enjoy this.

Jamison Lopp is the guy in the brown jacket. Prior to the meeting he was dumping amphetamines down his throat and jacking off to manga .jpgs.

He'll go down along with Casa Sh*tcoin Bitcoin Affinity Scam Wallet.

But Lo, I'm launching Satancore 1.0 next week ... it's a direct copy of Knots only with OP_RETURN fixed at 0 bytes.

And then week after week I'll launch more Satancore versions with various levels of OP_RETURN bytes capped at 40 bytes.

All donations to SatanCore will be doubled and sent directly to Luke Dash Jr. and then doubled again and sent to 529 college savings plans at Fidelity for his 11 kids.

My project will conclude with Jamison Lopp and Peter Todd sharing Russ Ulbrect's old Federal Prison cell where they can spend an eternity sodomizing each other. I'm already contacting various three letter agencies. The Trump Cabinet is balls deep into Bitcoin and mining.

Jailing paedofiles will be great campaign lit for Bobby Jr. and Tulsi who will run as a husband and wife team. (My sources tell me she rides him harder than her surf board).

That may give some clarity. (Not exact calculations, but I think it's quite on point.)

#vlog 25

Does Node Software Matter?

Knots at 22%... LFGGGG

HILARIOUS ⭐ ⭐ ⭐ ⭐ ⭐ I love these Hitler rants! I created this recently. Klaus Schwab finds out about Nostr:

PS: I will follow you just in the case you post new Hitler rants...

This meme explains really well what's going on with shitcoin core and how bitcoin is being attacked by some bad actors and commie core devs, but it's funny that this meme hasn't been shared by some well-known Bitcoin influencers on Nostr (including @ODELL), which just shows that Nostr is still an echo chamber for a special interest group.

It also tells you that @OpenSats do not want to acknowledge the mistake of funding commie core devs YET. Let's see if they acknowledge their mistake and stop funding these commie corrupt devs once knots hits 50%. It's already past 20%.

If @ODELL and @gladstein still fund the commie core devs then they are either delusional (at best) or compromised (at worst) at this point. If it's the latter then plebs may not want to donate any sats to @OpenSats or @npub1zhqc...h0dw.

#RunKnots

#ObliterateCore

Retard? So, where is your Argument against the statement of this meme?

It is so crazy how the blocksize war was a success with so many people who fall for even the silliest shit.

Archived, it made me laugh😄✌️

The good side of this discussion is that more people will run a node, and more people will inform themselves about node implementations.

But I also see a little danger: that newbies will get distracted and confused by these pointless fights within Bitcoin, and that many knots users will wake up to quite a big mess. It will probably be hard for knots users to accept that this time they are (maybe again) on the wrong side, as at the time of the blocksize war.

Totally agree. Don't fight unnecessary fights, focus on the real mission!

Luke himself had CTV on his roadmap post the other day.

The fight is fake, Core vs. Knots is a psyop for Jack's money vs. Jack's money.

Swap-fee based fake-L2's are driving development priorities in both cases.

Active development is cover for activist development.

I wrote off Guida as a serious person after his flailing virtue-signaling defense of Bolt12

I'll reel you in on covenants disrespect eventually, but hopefully not after the damage has been done.

I acknowledge the first sentence as true. It doesn’t move the needle for CTV any faster, because sandwiches hate Luke and most filterers are against any rushed changes and lean more in the ossification camp. I’ve spoken with many of them over the past 2.5 years and only a small number of them are convinced we need CTV.

The rest of your claims are kinda comical.

What's comical is you can't see past the fake fight. Sheep to the slaughter.

Doesn't matter if sammiches like Luke or not, they're on the SAME TEAM: the take-money-from-Fake-L2s team.

Fake L2's want CTV because filters won't matter at all if they get it.

Fake L2's are what fund active development, because they have a monetization model, swap fees.

Both Knots and Core want Bitcoin poisoned, it'll look like this unless active development is rejected wholesale.

Why not ask @Luke Dashjr?

No response on X, but I hope he'll acknowledge this in a manner consistent with his concerns about Bitcoin's ethereumification.

im talking about the hitler meme, to be clear

now u accepted that if i say "knots is bullshit" u cannot block me, but u still decided to move from X to nostr - good move

Thanks, I think

LMAO 😂

fck this is funny

24% 🚀🚀🚀

And here was Jameson Lopp of Casa wallet with dreams of pulling his yacht alongside Michael Saylor's ..... all hopes Dashed !

25% 🚀🚀🚀

I just wonder what can be the endgame here?

Filterers want to stop transactions they don't like, but no level of filter adoption can prevent a small fraction of nodes from relaying non-standard transactions and miners from accepting them directly.

Ocean is gathering hashrate.

When hard fork?

Ocean may be gathering hashrate, but not every miner in Ocean filters transactions.

> what can be the endgame here?

When you run the default relay policies in Knots you get one benefit immediately: less spam in your mempool

You also get two more subtle benefits: (1) you're not responsible for relaying most spam to miners (2) you slow down the propagation of spam-filled blocks

The latter benefit also gives spam-free blocks a relative speed boost

Awesome.

I wasn't paying attention to the novel and with this meme I have a full summary. 👌

Fewer valid transactions in my mempool will make my node unreliable in predicting the next block and estimating fees, especially in extreme cases where it could be critical.

Not relaying is the same as not running that node for those transactions; it doesn't stop anyone else.

The filtering node slows down its own block verification: it will be later to reach the tip, and will waste hashrate in that time if mining.

Only the fastest route counts, so even a supermajority would not be significant.

> Fewer valid transactions in my mempool will make my node unreliable in predicting the next block and estimating fees, especially in extreme cases where it could be critical

I do not understand this criticism. Neither Knots nor Core looks *only* at the mempool to estimate fees. Partly as a result of this, the scenario where a wallet based on Knots would give a fee estimate that "wouldn't work" seems absurd: (1) the total weight of spam transactions in the mempool would have to *suddenly* increase (2) without a corresponding, similar increase in competition from "normal" (non-spammy) transactions (3) to a point where the non-spammy transactions only show fees at level X (4) but the real feerate is actually at level Y (5) and Y is so much larger than X that X won't work. But even that's not enough, for the following reason:

As an example of a wallet with a critical need to get a transaction confirmed in a certain time frame, consider a lightning node. These have to be prepared to use RBF or CPFP to bump their transactions if a justice transaction is called for, because they must "go through" in a certain time frame. (E.g. see here: https://docs.lightning.engineering/lightning-network-tools/lnd/sweeper). Since Knots and Core both look at "recent blocks" to figure out what the current feerates are, and not just the mempool, this means the above scenario -- with the sudden increase in spam fees with several other conditions attached -- will only cause the wallet to fail to get its transaction confirmed if the scenario *repeats* as each subsequent block comes out, such that the additional fee information provided by block K+1 is already out of date when Knots tries to estimate fees again at the prompting of whatever wallet wants to use RBF or CPFP to get its transaction confirmed.

Given the absurdity of such a situation playing out in the real world, it seems equally absurd to use this as a legitimate criticism of Knots. Even in critical applications, your fee estimator does not need a *precisely accurate* mempool. If bitcoin required that, it would be a broken system, because the mempool is intentionally not consensus critical.
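The argument above can be illustrated with a toy model. This is not the fee estimator used by Knots or Core; `toy_fee_estimate` and its inputs are hypothetical, and taking the higher of two medians is just an assumption chosen to show the point: if the estimator also sees feerates from the most recent block, a filtered (partial) mempool alone cannot keep the estimate low for long.

```python
from statistics import median


def toy_fee_estimate(recent_block_feerates, mempool_feerates):
    """Toy estimate in sat/vB: the higher of the median feerate in the most
    recent block and the median feerate in the local mempool.

    recent_block_feerates: list of blocks, each a list of feerates (oldest first)
    mempool_feerates: feerates of transactions the local node accepted
    """
    last_block = recent_block_feerates[-1] if recent_block_feerates else []
    block_signal = median(last_block) if last_block else 0
    mempool_signal = median(mempool_feerates) if mempool_feerates else 0
    return max(block_signal, mempool_signal)


# A filtering node's mempool only shows low-fee "normal" transactions...
filtered_mempool = [2, 3, 4]
# ...but the latest block confirmed at much higher feerates.
blocks = [[3, 4, 5], [20, 25, 30]]

estimate = toy_fee_estimate(blocks, filtered_mempool)
```

Here the estimate follows the latest block (25 sat/vB in this made-up data) rather than the filtered mempool (3 sat/vB). For the estimate to stay too low, each new block would have to be out of date again by the time the next estimate is requested, which is the repeated scenario described above.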

This is excellent, Super!

Best dude around.

Thanks for clarifying. I understand that the fee estimation uses past block data and there is an ability to self-correct with RBF/CPFP.

If the concern is not about the initial estimate failing there is still a worse reaction time. If a node is blind to a large segment of the real mempool, wouldn't it be slower to detect a sudden spike in the fee market, potentially causing it to fall behind in a fee-bumping war?

On the other points we are also left with the problem that the network communication is breaking down because more nodes are rejecting the very transactions that miners are confirming in blocks.

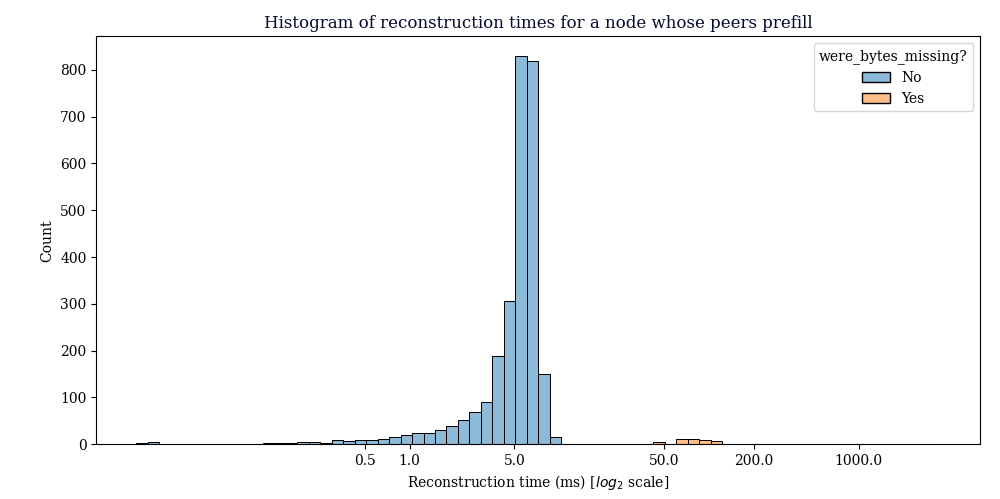

Here is the problem visualized:

"In early June we were requesting less than 10kB per block were we needed to request something (about 40-50% of blocks) on average. Currently, we are requesting close to 800kB of transactions on average for 70% (30% of the blocks need no requests) of the blocks."

from this research thread:

Delving Bitcoin

Stats on compact block reconstructions

An interactive/modifiable version of all the data and plots are in a jupyter notebook here: https://davidgumberg.github.io/logkicker/lab/index.html...
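For a rough sense of scale, the quoted figures can be turned into an average per block. This is back-of-the-envelope arithmetic only, and it assumes the quoted averages apply to the blocks that needed a request (not to all blocks); the 45% figure is the midpoint of the quoted "40-50%", and 10 kB is used as an upper bound for "less than 10kB". The function name is hypothetical.

```python
def avg_request_per_block(avg_kb_when_requesting, fraction_requesting):
    """Average kB requested per block, spread over all blocks."""
    return avg_kb_when_requesting * fraction_requesting


# Early June: under 10 kB, on roughly 45% of blocks (upper bound).
early_june_kb = avg_request_per_block(10, 0.45)

# Currently: close to 800 kB, on 70% of blocks.
current_kb = avg_request_per_block(800, 0.70)

# How many times larger the current average is than the early-June bound.
growth_factor = current_kb / early_june_kb
```

Under those assumptions the average goes from at most about 4.5 kB per block to about 560 kB per block, an increase of more than a hundredfold.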

> If a node is blind to a large segment of the real mempool, wouldn't it be slower to detect a sudden spike in the fee market, potentially causing it to fall behind in a fee-bumping war?

A fee-bumping war? I think I need more context. I am not aware of any real-world software that requires users to competitively bump their fees. Are you saying there *is* such a protocol? Are you perhaps referring to the LN attack where the perp repeatedly RBF's an input to a tx that creates old state?

Even there, the would-be victim doesn't have to repeatedly RBF anything. Instead, he is expected to repeatedly modify his justice tx to use the txid of whatever transaction the perp creates after *he* (the perp) performs an RBF. The victim *does* have to set feerate each time, but his feerate does not compete with his opponent's, as his opponent is RBF'ing the input to the justice transaction's *parent,* whereas the victim simply sets the feerate of the justice transaction *itself,* and he is expected to simply set it to whatever the going rates are.

Moreover, as mentioned above, I think it's absurd to expect a real-world scenario where Knots reports a too-low feerate for 2016 blocks in a row, despite getting information about the current rates from each of those blocks *as well as* its mempool. For that to happen, spam transactions would have to be broadcast at a completely absurd, constantly-increasing rate, for 2016 blocks in a row, with bursts of *yet further* increased speed right after each block gets mined (otherwise the fee estimator would know the new rate because it shows up in the most recent block), and the mempool would *also* have to go practically unused by anything else (otherwise the fee estimator would know the new rate when it shows up in the non-spam transactions that compete for blockspace with the spam transactions).

> On the other points we are also left with the problem that the network communication is breaking down because more nodes are rejecting the very transactions that miners are confirming in blocks.

This communication problem can be summarized as, "compact blocks are delayed when users have to download more transactions." I think driving down that delay is a worthwhile goal, but Core's strategy to achieve that goal is, I think, worse than the disease: Core's users opt to relay spam transactions as if they were normal transactions, that way they don't have to download them when they show up in blocks. If you want to do that, have at it, but it looks to me like a huge number of former Core users are saying "This isn't worth it," and they are opting for software that simply doesn't do that. I expect this trend to continue.

BitcoinKnots myself. Great interview btw the other day on @Guy Swann show

This is hilarious, regardless of which Bitcoin implementation one thinks is best.

Fair point regarding the fee estimation and appreciate the detailed breakdown. I gladly accept that the fee estimation is robust enough even with a partial view of the mempool.

> Core's strategy to achieve that goal is, I think, worse than the disease: Core's users opt to relay spam transactions as if they were normal transactions, that way they don't have to download them when they show up in blocks.

The choice isn't between a cure and a disease (purity vs. efficiency), but about upholding network neutrality. The Core policy relays any valid transaction that pays the fee, without making a value judgment.

The alternative - active filtering - is a strategic dead end for a few reasons:

- It turns node runners into network police, forcing them to constantly define and redefine what constitutes "spam."

- This leads to an unwinnable arms race. As we've seen throughout Bitcoin's history, the definition of "spam" is a moving target. Data-embedding techniques will simply evolve to bypass the latest filters.

- The logical endgame defeats the purpose. The ultimate incentive for those embedding data is to make their transactions technically indistinguishable from "normal" financial ones, rendering the entire filtering effort futile.

> It turns node runners into network police

It doesn't turn them into "network police" because they aren't policing "the network" (other people's computers) but only their own. I run spam filters because I don't want spam in *my* mempool. If other people want it, great, their computer is *their* responsibility.

> constantly define and redefine what constitutes spam

It doesn't constantly need redefinition. Spam is a transaction on the blockchain containing data such as literature, pictures, audio, or video. A tx on the blockchain is not spam if, in as few bytes as possible, it does one of only two things, and nothing else: (1) transfers value on L1 or (2) sweeps funds from an HTLC created while trying to transfer value on an L2. By "value" I mean the "value" field in L1 BTC utxos, and by "transferring" it I mean reducing the amount in that field in the sender's utxos and increasing it in the recipient's utxos.

> Data-embedding techniques will simply evolve to bypass the latest filters

And filters will simply evolve to neutralize the latest bypass. The spammers cannot win this race if the filterers are more diligent than they are.

> [What if they] make their transactions technically indistinguishable from "normal" financial ones

Then we win, because data which is technically indecipherable cannot be used in a metaprotocol. The spammers lose if their software clients cannot automatically decipher the spam.

If the spammers develop some technique for embedding spam that can be automatically deciphered, we add that method to our filters, and now they cannot use that technique in the filtering mempools. If they make a two-stage technique where they have to publish a deciphering key, then they either have to publish that key on chain -- which allows us to detect and filter it -- or they have to publish it off-chain, which is precisely what we want: now their protocol requires an off-chain database, and all of their incentives call for using that database to store more and more data.

I appreciate the detailed response, but in these points we are in disagreement:

1. Policing your node vs. "the network": Framing this as only policing your own node overlooks the network externalities. Your filtering directly impacts the efficiency of block propagation for your peers. It turns an individual policy choice into a network-wide cost.

2. Your definition on what transactions should be allowed: The proposed definition of "spam" is not a filtering policy; it's an argument for a hard fork. The current Bitcoin consensus explicitly allows these transactions, and has for years. To enforce your narrow definition network-wide, you would need to change the fundamental rules of the protocol. This brittle definition would not only freeze Bitcoin's capabilities but would also classify many existing financial tools from multisig to timelocks and covenants as invalid. The arbitrary exception for L2 HTLCs only proves the point: you're not defining spam, you're just green-lighting your preferred use cases.

3. The arms race is asymmetric: This isn't a battle of diligence; it's a battle of economic incentives. There's a powerful financial motive to embed data, but only a weak, ideological one to filter it.

4. You're underestimating steganography: You're focused on overt data, but the real challenge is data hidden within what looks like a perfectly valid financial transaction. A filter cannot distinguish intent. To block it, you'd have to block entire classes of legitimate transactions that are valid under today's consensus, which is a non-starter.

> Framing this as only policing your own node overlooks the network externalities

If I set up a fence around my house, that has neighborhood externalities. My neighbors can't see one another by looking across my lawn, for instance. But framing "anything with externalities" as "policing" the people it has an effect on is problematic. Just as it is not my responsibility to ensure that my two neighbors can see one another across my lawn, it is also not my responsibility to ensure that miners get their blocks to my peers quickly. I may decide to help some or all of them do that; but even if I do make such a decision, it is not as if that puts me in some position of responsibility where I cannot now apply filters to transactions that I *don't* want in my mempool and *don't* want to assist with.

> Your definition on what transactions should be allowed...is not a filtering policy; it's an argument for a hard fork.

Those things are not incompatible. One could theoretically propose something as a mempool filter *and* as a hard fork; the nice thing about mempools is, they do not require consensus to modify, so you can just do it. Whereas a hard fork is very hard precisely because unless you get a whole bunch of people to agree with you (i.e. get consensus) you end up just creating an independent network (not that there's anything wrong with that, unless you start scamming people with it)

If I *did* propose this for a fork, it would be a soft fork, not a hard one, as it would require *tightening* the rules, not loosening them. But it would have some of the same *effects* as a hard fork if it was contentious, because contentious softforks (theoretically) split the network into incompatible branches just like hard forks do.
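The tightening-versus-loosening distinction can be sketched with sets. This is a toy illustration only: the rule sets and transaction kinds below are made up, and `is_soft_fork` is a hypothetical helper, but the subset relation is the standard way to describe the difference.

```python
def is_soft_fork(old_valid, new_valid):
    """A rule change is a soft fork if everything valid under the new rules
    was already valid under the old rules (the valid set only shrinks)."""
    return new_valid <= old_valid


# Made-up kinds of transaction, for illustration only.
current_rules = {"payment", "htlc_sweep", "data_payload"}

# Tightening: stop accepting one kind. Old nodes still accept every new block.
tightened = {"payment", "htlc_sweep"}

# Loosening: accept a kind that old nodes reject. Old nodes may reject new blocks.
loosened = {"payment", "htlc_sweep", "data_payload", "new_feature"}
```

`is_soft_fork(current_rules, tightened)` is true and `is_soft_fork(current_rules, loosened)` is false, which is why a filter-style restriction, if it were ever proposed as a consensus change, would be a soft fork rather than a hard one.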

That said, while I don't want to entirely close the door on a soft fork, I think it is wise, for the aforesaid reasons, to just do it in my own mempool, and tell other interested people (if any) how to do it in theirs -- because I don't need consensus for that and I get all the benefits I seek as a result and I also make less people mad.

> The current Bitcoin consensus explicitly allows these transactions, and has for years. To enforce your narrow definition network-wide, you would need to change the fundamental rules of the protocol.

Nice that I don't *want* to enforce my definition network-wide, then. But we've been over similar ground moments ago; perhaps you think that slightly slowing block propagation speed among my peers counts as "enforcing my definition network-wide." If so, I disagree, and I'd particularly like to highlight that this slowdown doesn't even affect how fast my *peers* receive a block unless *my* connection with a given peer would otherwise be their fastest available connection. (If they've got peers A, B, and Me, and peer A is faster than me anyway, then it doesn't matter that I have to download some transactions before serving them a block -- they were gonna get the block faster from peer A anyway.) And, in my personal case, I very much doubt that I am anyone's fastest connection, as I personally operate on pretty bad wifi that I find in hostels and airbnbs.
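The "fastest peer" point can be sketched in a few lines. This is a simplified model with made-up numbers, not a measurement: it assumes a node has the block as soon as its fastest peer delivers it, and the peer names and delays are hypothetical.

```python
def block_arrival_time(peer_delays):
    """Seconds until a node first has the block: the fastest peer wins."""
    return min(peer_delays.values())


# A node with three peers; "me" is the filtering node.
peers = {"A": 0.2, "B": 0.5, "me": 0.4}
before = block_arrival_time(peers)

# "me" now needs extra time to download missing transactions first.
slowed = dict(peers, me=0.9)
after = block_arrival_time(slowed)

# The slowdown only matters if "me" would otherwise have been the fastest.
was_fastest = {"A": 0.2, "B": 0.5, "me": 0.1}
now_slower = dict(was_fastest, me=0.9)
```

In the first case `before` and `after` are both 0.2 seconds, because peer A was faster anyway. In the second case the arrival time moves from 0.1 to 0.2 seconds, the delay of the next fastest peer.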

> This brittle definition would not only freeze Bitcoin's capabilities but would also classify many existing financial tools from multisig to timelocks and covenants as invalid

If applied as a fork, yes, but that's not what I want to do. I only want to apply it in my own mempool, by not speeding along transactions that I find stupid. Good point about multisigs and timelocks, though; if I ever get around to implementing my preferred filter I will try to ensure it allows those, as I do want to help relay such transactions around on the network.

> The arbitrary exception for L2 HTLCs only proves the point: you're not defining spam, you're just green-lighting your preferred use cases

Greenlighting use cases that I "prefer" -- as in, want to see more widely adopted -- is precisely what I want to do in my own mempool. I don't want to help people spam the network; I want to help them adopt layer 2s and sometimes use L1 as money. So I want a filter that supports the latter things -- the things I like and want in my mempool -- and locally blocks the other things -- the things I don't like and don't want in my mempool.

> The arms race is asymmetric: This isn't a battle of diligence; it's a battle of economic incentives. There's a powerful financial motive to embed data, but only a weak, ideological one to filter it.

I suppose a similar thing is true of email spam: the motive to get email spam in front of many eyeballs is more powerful than my motive to block it from my inbox. Nonetheless, email filters are powerful enough to largely compensate for that asymmetry, and I'd like to help design mempool filters that offer similar compensation.

> You're underestimating steganography: You're focused on overt data, but the real challenge is data hidden within what looks like a perfectly valid financial transaction. A filter cannot distinguish intent. To block it, you'd have to block entire classes of legitimate transactions that are valid under today's consensus, which is a non-starter

Filtering entire classes of transactions that are valid under today's consensus is what this entire debate is about. I am enthusiastically in favor of doing so in my local mempool, and sharing what works with others who may have similar interests.

It's so great!!

Too good. 🔥

😂 lmfao

Funniest shit. I haven’t seen this meme in so many years.

@npub198au...0p3g only wants the forked coins

Smashed it 🔥🔥👊🏻

Nailed it, had a good laugh! Now lets get back to work plebs :D

Do all BitVM transactions transfer values on L1 or use HTLC to sweep funds?

Let's assume you support a specific soft fork: do you consider it spam if a Metaprotocol is demonstrating that exact soft fork instead of using Liquid?

Not all bitvm transactions transfer values on L1, and not all of them use HTLCs

> do you consider it spam if a Metaprotocol is demonstrating that exact soft fork

It is spam imo if and only if the metaprotocol's transactions add more data to bitcoin than would otherwise be there, data with a purpose other than the ones outlined in my definition. There's nothing wrong with simulating a soft fork in a metaprotocol, imo. But if, in service of demonstrating that feature, you use bitcoin as a text dump for your metaprotocol's data, that's spammy.

I am not sure I get the distinction... So if Liquid didn't create a sidechain and instead just wrote blocks in the opreturn of a linked list of transactions on Bitcoin is that spam?

A Metaprotocol by definition is written on Bitcoin, otherwise it is a sidechain or Spacechain or a Spiderchain or whatever.

If I am trying to black list misbehaving members of a dynamic federation, it makes tons of sense removing the DA question from the equation.

Another factor is showing the demand of these advanced smart contract evident on block space fee bidding.

Are you saying anything but a hash is spam, or are you saying inconsiderate unnecessary bloated formatting would be spam?

> if Liquid...[used] opreturn...is that spam?

Yes, because in my opinion all op_returns with a data payload are spam
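To make that rule concrete, here is a minimal sketch of how a local policy check might flag such outputs. The function names are hypothetical and not part of any node software, and the sketch assumes that "data payload" simply means any bytes following the OP_RETURN opcode in the output script.

```python
OP_RETURN = 0x6A  # opcode value of OP_RETURN in bitcoin script


def is_data_carrying_op_return(script_pubkey: bytes) -> bool:
    """True if the script is OP_RETURN followed by at least one more byte.

    Assumption for this sketch: any bytes after the opcode count as payload.
    """
    return len(script_pubkey) > 1 and script_pubkey[0] == OP_RETURN


def tx_has_op_return_payload(output_scripts) -> bool:
    """True if any output script of a transaction carries an OP_RETURN payload."""
    return any(is_data_carrying_op_return(s) for s in output_scripts)


if __name__ == "__main__":
    bare = bytes.fromhex("6a")                  # OP_RETURN with nothing after it
    text = bytes.fromhex("6a0568656c6c6f")      # OP_RETURN pushing b"hello"
    p2wpkh = bytes.fromhex("0014" + "11" * 20)  # ordinary payment output
    print(tx_has_op_return_payload([p2wpkh, bare]))
    print(tx_has_op_return_payload([p2wpkh, text]))
```

A real filter would sit in the node's relay policy rather than in a standalone script; this only shows the classification step.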

> A Metaprotocol by definition is written on bitcoin

Yes, but it does not necessarily occupy any extra space. For example, the RGB team designed their metaprotocol so that it can "piggyback" on financial transactions that were already going to happen anyway, and only *tweak* the fields in such transactions. That way, the extra data doesn't take up any extra space.

I don't consider that spam. If nodes were going to store the same amount of data anyway by storing the tx *without* tweak data, tweak it, by all means. Then your metaprotocol can have whatever features you want without counting as spam per my definition, because it doesn't increase the amount of data on bitcoin.
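As a rough illustration of why a tweak adds no bytes, here is a sketch of a generic pay-to-contract style key tweak on secp256k1. It is not RGB's actual commitment scheme; the helper names and the commitment message are made up for the example. The point is only that the tweaked public key serializes to the same 33 bytes as the untweaked one.

```python
import hashlib

# secp256k1 parameters
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)


def add(a, b):
    """Add two curve points; None represents the point at infinity."""
    if a is None:
        return b
    if b is None:
        return a
    if a[0] == b[0] and (a[1] + b[1]) % P == 0:
        return None
    if a == b:
        lam = 3 * a[0] * a[0] * pow(2 * a[1], -1, P) % P
    else:
        lam = (b[1] - a[1]) * pow(b[0] - a[0], -1, P) % P
    x = (lam * lam - a[0] - b[0]) % P
    return (x, (lam * (a[0] - x) - a[1]) % P)


def mul(k, pt):
    """Scalar multiplication by double-and-add (not constant time)."""
    result = None
    while k:
        if k & 1:
            result = add(result, pt)
        pt = add(pt, pt)
        k >>= 1
    return result


def serialize(pt) -> bytes:
    """Compressed 33-byte public key encoding."""
    return bytes([2 + (pt[1] & 1)]) + pt[0].to_bytes(32, "big")


def tweak_pubkey(pub, commitment: bytes):
    """Return (pub + t*G, t) where t = SHA256(pub || commitment) mod N."""
    t = int.from_bytes(hashlib.sha256(serialize(pub) + commitment).digest(), "big") % N
    return add(pub, mul(t, G)), t


if __name__ == "__main__":
    priv = 0xC0FFEE  # toy private key, for illustration only
    pub = mul(priv, G)
    tweaked, t = tweak_pubkey(pub, b"hypothetical metaprotocol state root")
    print(len(serialize(pub)), len(serialize(tweaked)))
```

The owner can still spend, because the private key for the tweaked point is `(priv + t) % N`, and anyone shown the commitment can recompute the tweak and check it against the key that appears on chain.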

> Are you saying anything but a hash is spam

A hash can be spam too, but it depends. The bitcoin protocol supports hashes for things like htlcs. If a metaprotocol used the hash of an htlc that was already going to happen anyway, and put their metaprotocol hash in it, that wouldn't count as spam in my book.

But if they created an htlc not because it was needed by a bitcoin tx, but because they wanted to put a hash on bitcoin for use in their metaprotocol and couldn't be bothered to piggyback, I would consider that slightly spammy. Though since it's only a hash in one transaction I would also consider it only *slightly* harmful.

RGB doesn't work for everything that you want a Blockchain for, I don't think it works at all to showcase how smart contracts help L2s with fraud proofs etc... for many reasons, but let's just start with the data withholding.

In fact there are zero interesting applications that RGB offers me, I don't want to create tokens. I only care about:

1. Experiments for L2 bridges

2. Possibly satisfying the demand for Stablecoins while still using Bitcoin as backing both for freedom and sustainability of Bitcoin with more value and demand.

Neither of these are possible with RGB as far as I know, the next best thing is BMM but that is why I said that is not Metaprotocol.

BMM is cool but there is a good argument that when you are already battling 2WP, might as well not also fight Data Withholding... Especially when you are aiming for a Metaprotocol (let's call it embedded consensus or embedded chain) that is not expected to require too much data (if you only use it as stepping stone to L2s).

Is adding 10% of extra data really spammy if that is the goal?

If we are affording some grace to Bitvm (which I often see spam concernors do) why not do the same for other attempts to break free of the endless "PrOvE tHe DeMaNd FoR CoVeNaNtS fIrSt".

Of course one can argue that the demand could be proven on Liquid instead ... But I submit to you that people spent money on worthless Ordinals instead of doing the same on Liquid for a reason.

> I don't want to create tokens. I...care about...satisfying the demand for Stablecoins while still using Bitcoin as backing...

Those two statements sound incompatible. Bitcoin-backed stablecoins are tokens. Unless they currently exist, they must be created.

> let's just start with the data withholding

If someone embedded a hash of sidechain block X into bitcoin using an RGB-like piggyback scheme (so that it doesn't take up extra space), but then withheld the data of block X, you could first challenge them to reveal the hash for block X (if they haven't already done so), and then, if they DON'T reveal it, they get slashed, and if they DO reveal it, you can then further challenge them to reveal any transaction relevant to *you* by means of merkle proofs. Once again, if they DON'T reveal it, they get slashed, and if they DO reveal it, the data withholding problem is solved.

No, Stablecoins backed by Bitcoin are not mere tokens... There is a whole lending contract necessary to mint them, not just a token you mint and transact with RGB without the need for an observable chain.

Now, if you take your DA challenge idea to its extreme you will find that it devolves to exactly always embedding blocks on Bitcoin... Because either there is no cost for the challenge in which case I will personally challenge everything just to prove a point, OR there is a high cost for challenging, which can't be refunded because you can never prove the data was withheld so you will just have to pay it.

So if you want a chain to do things that require people to be aware of what is going on, like minting Stablecoins with Bitcoin lending, or with optimistic challengeable bridges etc... then you will need to keep posting the data all the time, except now you also need to publish the challenges, so more "spam".

The other viable alternatives here are to use the same federation of the pegout as the DA committee AKA liquid... Or use merge mining AKA Rootstock (which is way harder to bootstrap).

But if your blocks are meant to be small and infrequent, just embedding them on Bitcoin removes tons of complexity and ton of ways for things to go wrong. And again, if you are trying to do something difficult like exploring covenants or Simplicity... Choose your battles

> if you take your DA challenge idea to its extreme you will find that it devolves to exactly always embedding blocks on Bitcoin

I disagree for reasons about to be discussed

> either there is no cost for the challenge in which case I will personally challenge everything just to prove a point

That seems unworkable and I think we agree on that

> OR there is a high cost for challenging, which can't be refunded because you can never prove the data was withheld so you will just have to pay it

That seems reasonable and does not result in always embedding blocks on bitcoin. Users select a sequencer optimistically. If he attempts to withhold data, users can pay a higher-than-normal cost, which is effectively the cost of a unilateral exit.

The unilateral exit sequence forces the sequencer to reveal the data he tried to withhold or get slashed. Either way, the users exit unilaterally. That bankrupts the bad sequencer, because all his users unilaterally exited to avoid being victims of the data withholding attack. Then those users pick a new sequencer.

Eventually, this process leads to only "good" sequencers remaining. Due to incentive alignment, the process will probably "begin" with only good sequencers as well, since no one wants to go bankrupt, and the only way *not* to be bankrupted is to start out as a good sequencer and remain good.

And the great thing about good sequencers is, they don't need to be challenged, because they don't withhold valid data, so no extra "challenge" data goes on-chain -- or, if it does, it's because of a troll user, who pays the price for trolling due to the higher-than-normal cost of initiating the unilateral exit sequence. Thus only trolls pay that cost, which is desirable, as that eventually bankrupts them, leaving only "good" users and "good" sequencers.

> So if you want a chain to do things that require people to be aware of what is going on...then you will need to keep posting the data all the time

I think the above analysis demonstrates why I think that is incorrect. You can align the incentives properly to bankrupt "bad" sequencers and trolls, such that only "good" sequencers and "good" users are left -- i.e. the ones who follow the protocol in part to avoid going bankrupt.

> No Stablecoins backed by Bitcoin are not mere tokens... There is a whole lending contract necessary to mint them, not just a token you mint and transact with RGB without need for observable chain.

I didn't say bitcoin-backed stablecoins are "mere" tokens. I just said they are tokens, which you seem to admit.

If I have to deal with DA uncertainty there would be way simpler solutions, like just using BMM then require 13000 confirmations in the sidechain before withdrawal like in Drivechains but this time with a STARK proof to take validity out of the equation.

And indeed that is how I think scaling should happen. But scaling isn't the goal of what I am suggesting, instead the goal is to extend Bitcoin smart contracts to make it easier for all kinds of L2s to hook into Bitcoin... With that goal in mind not having to deal with DA, keeps the challenge limited to bootstrapping a dynamic federation to deal with escrows in the absence of a soft fork.

I don't think this is unreasonable, but also, it is not a democracy, data censorship soft forks are going to fail even harder than covenants

There are many advantages of just BMM with delay for withdrawals... Not least of which is the cost of bitcoin fees.

But if you want an escrow to be comfortable knowing that no one can claim they misbehaved, what alternative do you have to perfectly visible data + immutable consensus?

Let me rephrase this: Any escrow helping someone withdraw can't be passive if the data used in the withdrawal isn't on chain, because they will have to keep that data available out of band, for future withdrawals... Because once you withdraw from a fork this fork can't be reorged without double spending.

So now the escrow needs to either keep providing data availability or keep acting as a watchtower challenging withdrawals.

Both are obviously way worse than asking them to run a stateless pure function in a TEE just to enforce the bridge.

I think my previous posts in this thread outline a safe solution to the data availability problem. By protocol, a sequencer merkelizes every sidechain block and posts the root on bitcoin via a piggyback mechanism. If he is a good sequencer, he also broadcasts the full block data on a secondary platform, such as his website. Users use that data to create transactions that update their state.
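Here is a minimal sketch of the merkelization step, assuming SHA-256, a duplicate-last-node rule for odd levels, and made-up state entries. None of these choices come from a spec; they are only there to show that a single 32-byte root can commit to a whole block, and that one user's entry can later be proven against that root without revealing the rest.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """Return every level of the tree, from hashed leaves up to the root."""
    if not leaves:
        raise ValueError("a block needs at least one entry")
    level = [h(b"\x00" + leaf) for leaf in leaves]  # 0x00 tags leaf hashes
    levels = []
    while True:
        if len(level) > 1 and len(level) % 2:
            level = level + [level[-1]]  # pad odd levels by repeating the last node
        levels.append(level)
        if len(level) == 1:
            return levels
        level = [h(b"\x01" + level[i] + level[i + 1])  # 0x01 tags inner nodes
                 for i in range(0, len(level), 2)]


def prove(levels, index):
    """Collect (sibling_hash, sibling_is_left) pairs for the leaf at `index`."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(root: bytes, leaf: bytes, proof) -> bool:
    """Recompute the path from `leaf` and compare it with the committed root."""
    node = h(b"\x00" + leaf)
    for sibling, sibling_is_left in proof:
        node = h(b"\x01" + sibling + node) if sibling_is_left else h(b"\x01" + node + sibling)
    return node == root


if __name__ == "__main__":
    # Hypothetical per-user state entries in one sidechain block.
    block = [b"alice:5", b"bob:7", b"carol:2"]
    levels = build_levels(block)
    root = levels[-1][0]  # the only value the sequencer would commit on bitcoin
    proof = prove(levels, 2)
    print(verify(root, b"carol:2", proof))
    print(verify(root, b"carol:200", proof))
```

The proof for one entry is a handful of hashes, so a challenged sequencer only has to reveal the path for the challenger's own state rather than the full block.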

If the sequencer ever withholds data, any user can start a challenge that forces the sequencer, at risk of getting slashed, to reveal a portion of the latest blockchain data. Specifically, the sequencer must reveal the portion involving that user's latest state, and then, whatever that state indicates about the user's balance, the sequencer must pay it out to the user on L1, if the user agrees that it is the right state.

If the user claims the state has the wrong amount, the user can issue another challenge in which they present their "real" latest state and challenge the sequencer to prove a valid state transition which reduced the user's balance with their approval. The sequencer cannot do this if the user is honest, so they get slashed; or, if the user is dishonest, the challenge ends with no bad consequences for the sequencer.

To disincentivize users from constantly issuing troll challenges, users must pay the cost of putting all challenge data on chain. If the sequencer does not post valid data, the sequencer gets slashed, and the user exits possibly even richer than he was before. If the sequencer posts valid data, the user has now exited and the data withholding attack was repelled by forcing the sequencer to reveal it or get slashed. The user paid extra for this "unilateral exit," but it is common for unilateral exits to cost more than cooperative ones, so that is fine imo. If the sequencer is unreliable about revealing valid data, he will lose his customers with no gain, and if he is reliable, none of this happens in the first place, because no one has an incentive to issue a costly challenge if the sequencer is doing everything right.
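The two outcomes can be written down as a tiny payoff function. This is only a sketch of the incentives described above: `challenge_fee` and `slash_reward` are hypothetical parameters, and the idea that some of the slashed bond goes to the challenger is my assumption for how the user could exit "richer."

```python
from dataclasses import dataclass


@dataclass
class ChallengeResult:
    sequencer_slashed: bool
    user_payout: int  # what the user exits with on L1, net of the challenge fee


def resolve_challenge(revealed_valid_data: bool, user_balance: int,
                      challenge_fee: int, slash_reward: int) -> ChallengeResult:
    """Model one challenge: the user always exits and always pays the on-chain cost."""
    if revealed_valid_data:
        # Withholding failed: the data is public and the user simply exits.
        return ChallengeResult(False, user_balance - challenge_fee)
    # Sequencer stayed silent or posted invalid data: he is slashed.
    return ChallengeResult(True, user_balance + slash_reward - challenge_fee)


if __name__ == "__main__":
    print(resolve_challenge(True, user_balance=100_000, challenge_fee=2_000, slash_reward=50_000))
    print(resolve_challenge(False, user_balance=100_000, challenge_fee=2_000, slash_reward=50_000))
```

A troll challenging an honest sequencer always lands in the first branch, so every pointless challenge costs him `challenge_fee` and gains him nothing.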

For more details, I have a writeup here:

Telegraph

A forthcoming problem with bitvm

Introduction

In the past few months, several bitvm companies announced preparations for launching on mainnet, and I began to research their plans. ...

I think you are not noticing what I am saying; whoever slashes the sequencer could have been themselves the Data Availability Committee and sign on all blocks... Unless you mean slashing with BitVM which means the data has to be posted with BitVM hacks... In which case I check out.

Either way, it is not clear why we should bother with introducing a challenge system for DA, when we already have a proof of publication, and we would be using it not to post dick butts but to basically add something that everyone agrees is needed.

Is it really better to post tons of inflated data with BitVM challenges, than just write contracts onchain and hold the escrows accountable that way?

I didn't even mention the effect of watching the demand for smart contracts evident on chain.

Anyways, I think I understand your position now, thanks.

I think your main issue with using L1 for DA, is that you think people are trying to add scalability... No, people are trying to have usable smart contracts, then do all the fraud proofs or validity proofs or proof of stake and any tricks they might need for scaling there, with actual smart contract language that is not as awful as BitVM (which by the way forces people to use ZKVMs that are far from water tight)...

So it doesn't matter that the "side"chain will be expensive, it is not meant to compete with LN, it is meant to make L2s nicer to develop + enable things like Stablecoins and vaults ... All usecases for large transactions anyways.

> Is it really better to post tons of inflated data with BitVM challenges, than just write contracts onchain and hold the escrows accountable that way

Using bitvm doesn't require posting tons of data anymore. Ever since the realization that bitvm can use garbled circuits, two benefits emerged: (1) you only need to post data in the sad path and (2) the data only needs to be one or two standard preimages and hashes -- nearly the same amount of data required to resolve a lightning htlc on L1. That is far better than writing every smart contract on chain. It is cheaper for end users and less spammy for nodes.

> you think people are trying to add scalability... No, people are trying to have usable smart contracts

I think they are trying to do both, and I think my proposal is the cheapest way to do both proposed so far, and as a result, also the least spammy.

> [they want an] actual smart contract language that is not as awful as BitVM

Bitvm allows you to use any smart contract language you want. Normal devs are not expected to write their programs in binary circuits, that's what compilers are for.

> [bitvm] forces people to use ZKVMs that are far from water tight

I am unfamiliar with this criticism, do you have more details or a link to where I can read more?

No, this is not true, every time a withdrawal is attempted with withheld data, everyone will need merkle proofs, but many contracts that aren't owned by anyone in particular will also need the entire data in that contract and not just the last utxo.

For example if 100 blocks were created in the sidechain, and each block contained an OP_CCV like state update, these updates could be an append only log, meaning all these updates are necessary (for example these updates could be themselves proposed state updates that only settle after they are enough txns deep, or simply chunks across blocks).

You need all this data to continue the sidechain... And even worse you also wanted to see these updates in a timely manner... You can't just wait till they are dumped on you.

So, 1) it is not the sad path of withdrawal with withheld data, proof of publication is necessary in the average case... You don't even need to think of the Defi, even vaults need this.

2) BitVM data carrying suuuuucks... You are most probably going to end up on the average case spending more money and bloating the chain more.

You are probably correct if all you are trying to do is replicate Lightning but with many users... I.e just transfer of fungible tokens... But as I said, this doesn't suffice.

You are welcome to build it though, it is great that what you are happy with doesn't require new consensus

Actually your proposal is worse than just using Rootstock, because Rootstock already solved DA by merge mining... Or just Spacechains with BitVM bridge ... A rollup without a good DA solution is basically an exchange with some handwaving. Again you can't just make smart contracts by promising that you can ask for your merkle proof before a contested withdraw... You need data availability all the time. Not so much for something like Ark, but definitely for the rest.

BitVM is basically a 1-of-N multisig + endless opportunities for bugs on every novel step, from the garbled circuits to the data embedding and of course the ZkVMs which are necessary if you are not going to write circuits by hand.

If you must deal with all this, why on earth deal with DA too? Build a bridge to a BMM sidechain at least to have a decent chance of DA ... Choose your battles.

And as you can see ZKVMs are a dumpster fire, and even the developers aren't hiding it, they just tolerate it because in Ethereum they have smart contracts allowing for upgrades after demonstrating a bug... if you want the same in Bitcoin you will basically need a regular ass multisig "emergency federation".... Sooooo we are just torturing ourselves for no reason?

https://hackenproof.com/reports/RISCZKVM-25

> Rootstock already solved DA

I think my proposal is superior to theirs because it also solves the DA problem but puts less data on the blockchain

> you can't just make smart contracts by promising that you can ask for your merkle proof before a contested withdraw... You need data availability all the time

My proposal gives you data availability all the time. The moment the sequencer tries to withhold data, that's the moment when you issue the challenge and force them to reveal the data or get slashed. They can never withhold data without undergoing such a challenge, whereupon it gets revealed or you get your exit. Since they can never withhold data, the data is always available.

That is simply wrong, because you are making the assumption that the user will only demand their own data... But again you need all the data, and the nature of the challenge means that you can't challenge for one utxo at a time, you need to make sure the challenger pays for the reveal of the whole thing... Which will be way more expensive than putting many many many many blocks in opreturn.

Also your proposal is inferior to Rootstock or BMM in sooo many ways, not the least that you have to set up BitVM with everyone who is allowed to create blocks, and in BitVM3 users are supposed to trust that the garbled circuits were set up correctly and the covenant committee won't cheat... That is basically a trash can on fire compared to BMM or normal MM where anyone can mine (and thus implicitly challenge DA by building on top of other blocks)... And anyone can trust that.

If you think your proposal is better than 80 bytes per block, then of course you wouldn't see why putting the entire sideblocks in Bitcoin has a lot of value... You are a bit extreme here.

Actually I am glad you mentioned data on chain as a con for merge mining... That basically tells me there is no point trying to be considerate and only fees should be taken into account, there will always be someone who doesn't like even an extra 80 bytes per block, fully paid for.

Miners will appreciate the extra fees though if the embedded block can have enough demand to pay for it.