every day with goose using a local llm

Replies (23)

What are you working on currently jack?

whats your local llm setup?

ollama with qwq or gemma3

on mac?

yes

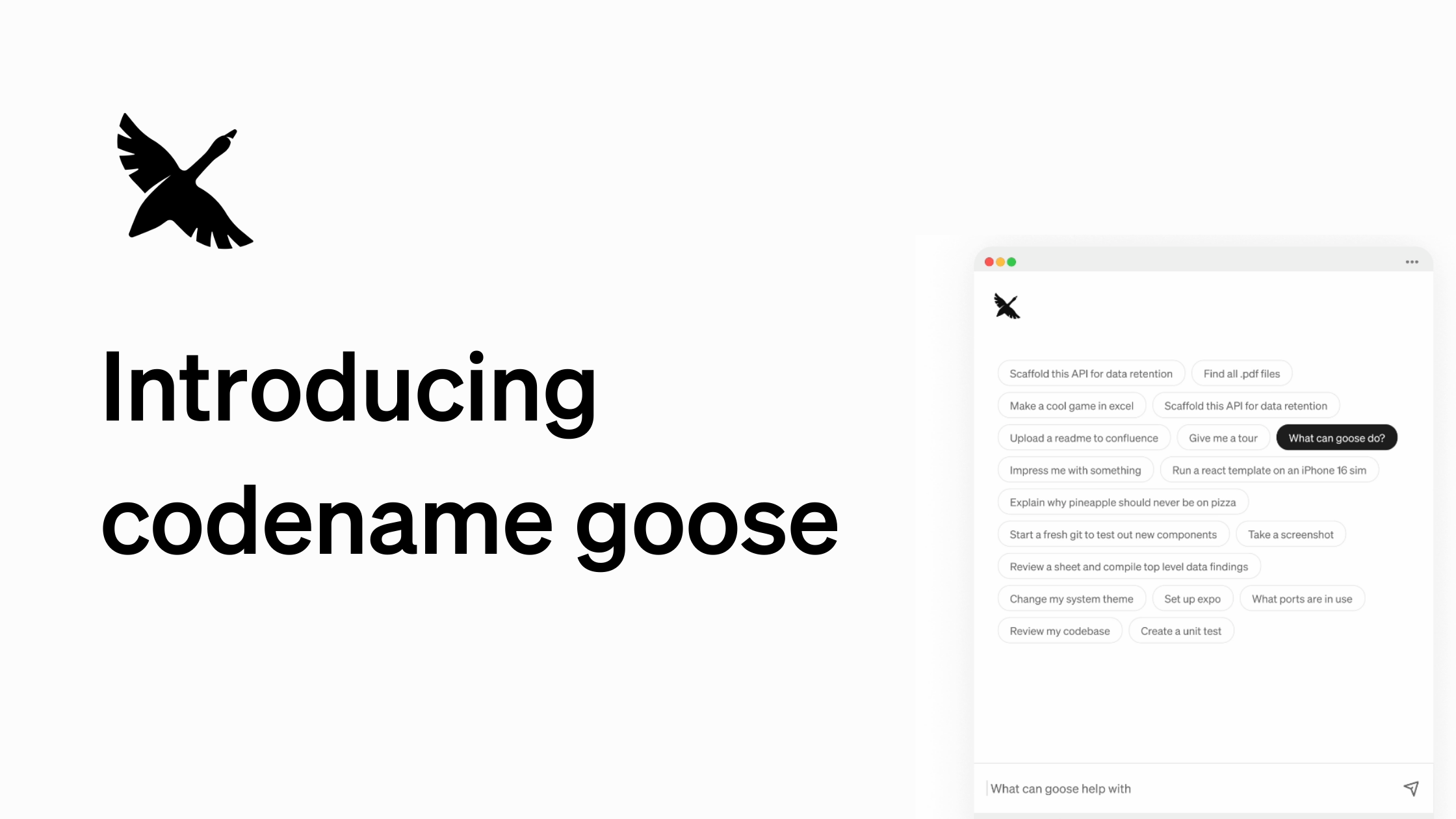

what the hell is goose? someone please fill me in

Just had a quick look into this and it seems possible to do this for free, i.e. to run open-source models like Llama 2, Mistral, or Phi-2 locally on the Mac using Ollama.

No internet, no API keys, no limits and Apple Silicon runs them well.

you can even use dave with a setup like this. fully private local ai assistant that can find and summarize notes for you

Cool. I’m still learning. So much to play with!

Tried Qwen3 yet?

Would 48 gb be sufficient?

Which model? Been testing for 14 months now, but Claude sets the bar high

Oh, you answered a reply. Primal doesn't show replies to replies by default? 🤦‍♂️

Wouldn't have happened in Damus 🔥

here to help 🤗

At least I have self-custodial zaps set up in Primal web now. One of these days their iOS app will too... 🥂

I just run qwen3:30b-a3b with a 64k context (tweak in the Modelfile) and it can do things 🤙. Uses 43 GB.
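For anyone wondering what that Modelfile tweak might look like, here is a rough sketch, not necessarily the exact setup used above. It assumes the standard Ollama Modelfile directives `FROM` and `PARAMETER num_ctx`, and that "64k" means 65536 tokens; the helper name `build_modelfile` is made up for illustration.

```python
from pathlib import Path


def build_modelfile(base_model: str, num_ctx: int) -> str:
    """Return Modelfile text that derives from base_model with a larger context window."""
    # FROM picks the base model; PARAMETER num_ctx sets the context length in tokens.
    return f"FROM {base_model}\nPARAMETER num_ctx {num_ctx}\n"


if __name__ == "__main__":
    # Assumption: 64k context means 64 * 1024 = 65536 tokens.
    text = build_modelfile("qwen3:30b-a3b", 64 * 1024)
    Path("Modelfile").write_text(text)
    print(text)
```

After writing the file, something like `ollama create qwen3-64k -f Modelfile` should register the variant (the name `qwen3-64k` is just a placeholder). Expect memory use to grow with the context size.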

How much video RAM is needed to run a version of the models that are actually smart though? I tried the Deepseek model that fits within 8 GB of video RAM, and it was basically unusable.

next step is that llms know each other’s strengths and weaknesses, and we get them to select the best llm for the particular task.

I wonder what I am doing wrong. I was so excited to get this set up, but I've been at it all day and keep running into hiccups. Here's my ChatGPT-assisted question:

I tried setting up Goose with Ollama using both qwq and gemma3, but I'm running into consistent errors in Goose:

error decoding response body

init chat completion request with tool did not succeed

I pulled and ran both models successfully via Ollama (>>> prompt showed), and pointed Goose to http://localhost:11434 with the correct model name. But neither model seems to respond in a way Goose expects — likely because they aren’t chat-formatted (Goose appears to be calling /v1/chat/completions).

@jack Are you using a custom Goose fork, adapter, or modified Ollama template to make these models chat-compatible?
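One way to narrow this down without Goose in the loop is to hit Ollama's OpenAI-compatible endpoint directly, once as a plain chat request and once with a tool attached. This is only a diagnostic sketch: it assumes the `http://localhost:11434` address from the question, the tool named `get_time` is hypothetical, and the idea that the failure is tied to tool calling is a guess based on the error text, not something confirmed here.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # address taken from the question above


def build_chat_request(model: str, with_tool: bool = False) -> dict:
    """Build an OpenAI-style chat completion payload, optionally with one tool."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": "Say hello."}],
        "stream": False,
    }
    if with_tool:
        # Hypothetical tool, only here to see whether the model accepts tools at all.
        payload["tools"] = [{
            "type": "function",
            "function": {
                "name": "get_time",
                "description": "Return the current time.",
                "parameters": {"type": "object", "properties": {}},
            },
        }]
    return payload


def send_chat_request(payload: dict, base_url: str = OLLAMA_URL) -> dict:
    """POST the payload to /v1/chat/completions and return the parsed JSON reply."""
    req = urllib.request.Request(
        base_url + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)


if __name__ == "__main__":
    for with_tool in (False, True):
        try:
            reply = send_chat_request(build_chat_request("gemma3", with_tool))
            print("tools" if with_tool else "plain", "->", reply["choices"][0]["message"])
        except Exception as exc:  # HTTP errors and connection failures both land here
            print("tools" if with_tool else "plain", "-> failed:", exc)
```

If the plain request works and the tool request fails, the model or its template probably lacks tool support, which would point at the model choice rather than the Goose configuration.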

Serial killer