Hello, fellow Nostriches.

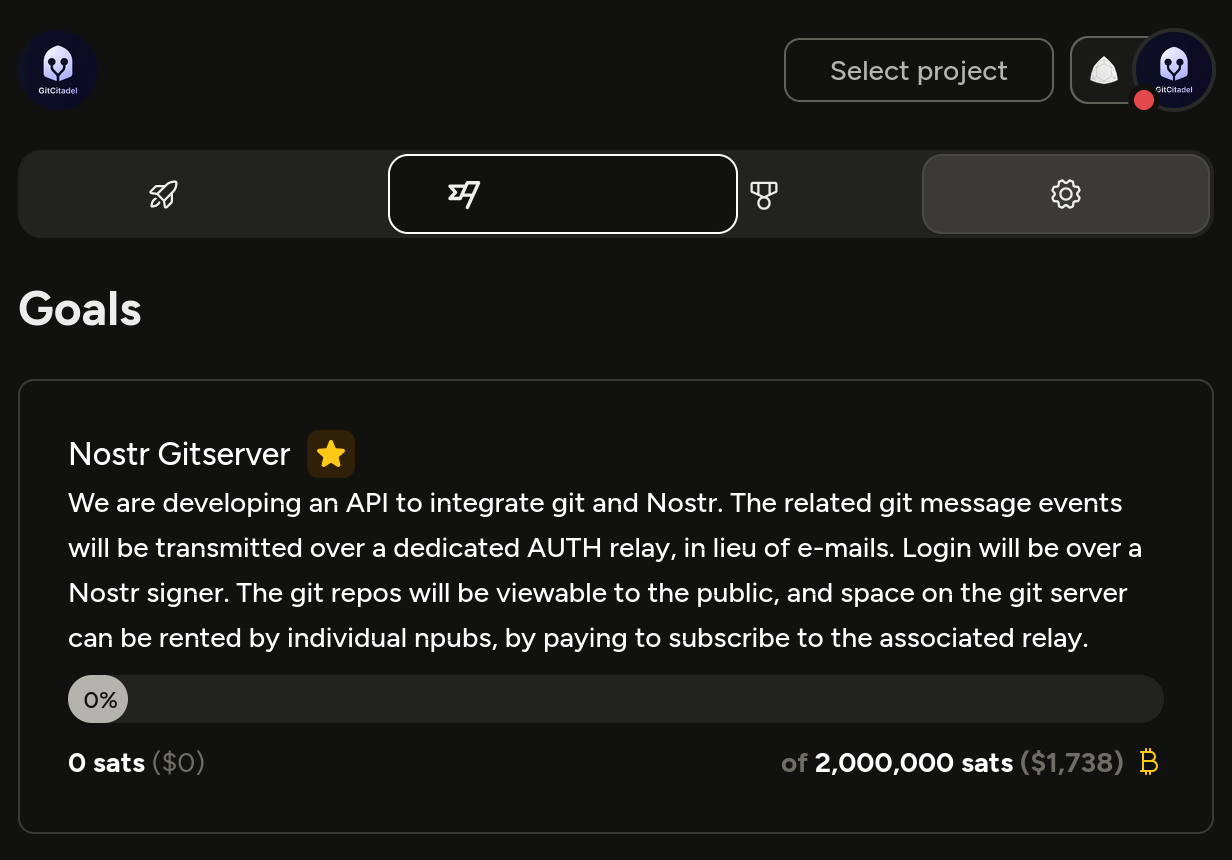

We at #GitCitadel have spent this week pricing out remote servers and brainstorming the most cost-effective, yet still performant, way to implement a public #gitserver based upon Nostr. To help us front the rental fees and lower the price for individual npubs, we have started a new funding goal.

The resulting server will have multiple #gnostr implementations and tools on it. We are planning to begin with:

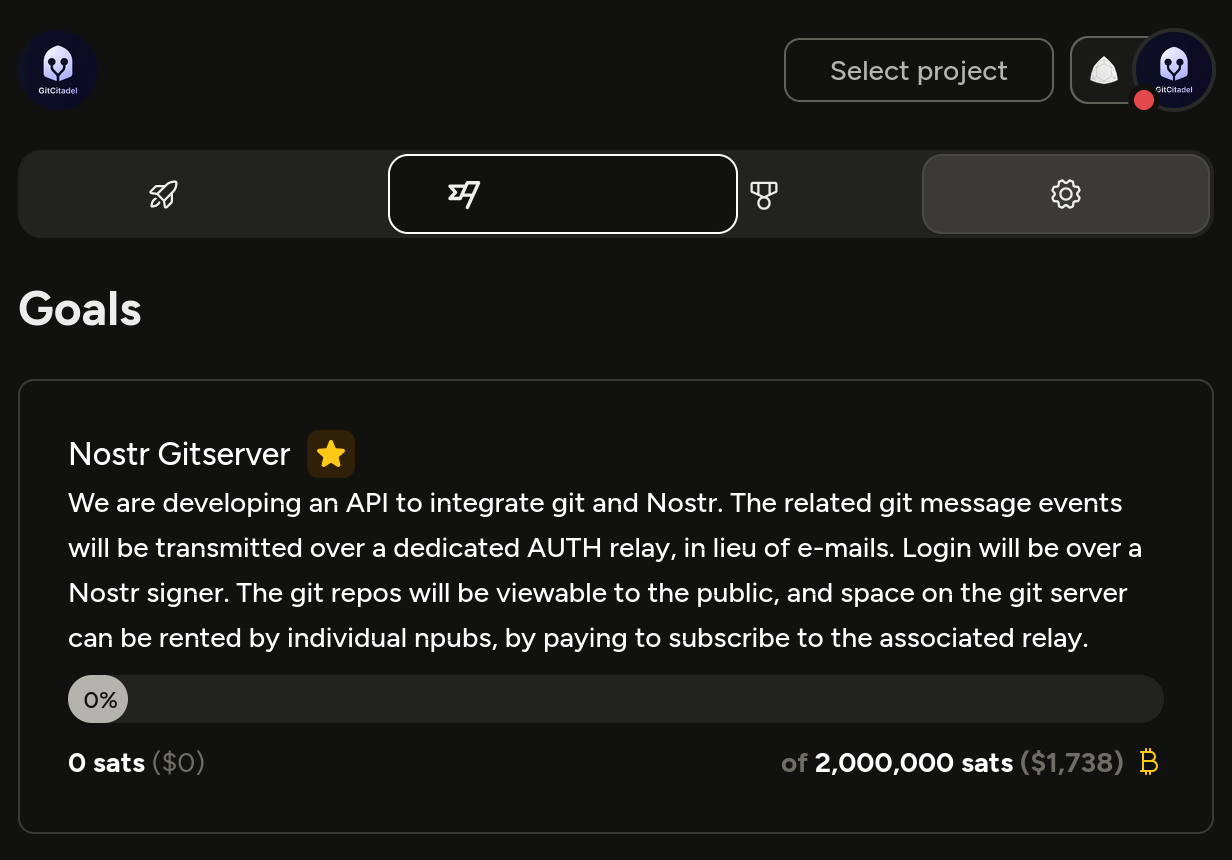

* the #GitRepublic API, which will make it possible to log in to git with your Nostr signer and will replace e-mail communication with Nostr notes,

* an HTTP-based git server (the first thing that will be going online, so that everyone can move or mirror their repos),

* a paid AUTH relay https://gitcitadel.nostr1.com (currently 5000 sats/month to cover all the running costs, but we hope to bring that down dramatically thanks to your donations),

* an #Alexandria instance focused on technical documentation and styled for the dev users,

* and an instance of  that displays the repos on the server.

It's difficult to compete with a "free" public service like GitHub or Codeberg, but we're hoping that tighter integration with Nostr will add some value for the developers using it, and for the public interested in browsing the PoW stored on it. Through the integration of Nostr and Lightning, we are leaning into comfortable, Nostrized, friction-free logins, and will facilitate V4V payments for Nostr's hard-working devs.

Many thanks in advance.

And may you have a good morning.

Geyser | Bitcoin Crowdfunding Platform

A Bitcoin crowdfunding platform where creators raise funds for causes, sell products, manage campaigns, and engage with their community.

GitWorkshop.dev

Decentralized github alternative over Nostr