High Thinking Velocity flow:

1. Voice-In, Thought-Out:

• Convert incoming spoken language into high-quality text via a state-of-the-art speech-to-text (STT) system.

• Process user voice inputs instantly so that ideas are captured in real time, reducing the cognitive overhead of typing.
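A minimal sketch of the real-time capture step, assuming audio arrives as short frames of float PCM samples: a simple energy-based voice activity detector hands each utterance onward as soon as the speaker pauses, instead of waiting for the whole recording. The threshold, the pause length, and the names `rms` and `segment_utterances` are illustrative assumptions. The speech-to-text engine that would consume each segment is not shown.

```python
import math
from typing import Iterable, Iterator, List

Frame = List[float]  # assumed: one short chunk (e.g. 20 ms) of PCM samples in [-1, 1]


def rms(frame: Frame) -> float:
    """Root-mean-square energy of one frame (0.0 for an empty frame)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def segment_utterances(
    frames: Iterable[Frame],
    threshold: float = 0.02,     # hypothetical energy cutoff for "speech"
    max_silent_frames: int = 3,  # hypothetical pause length that ends an utterance
) -> Iterator[List[Frame]]:
    """Yield each utterance as soon as the speaker pauses.

    An utterance starts at the first frame whose energy reaches `threshold`
    and ends after `max_silent_frames` consecutive quieter frames.
    Short pauses inside an utterance are kept; trailing silence is dropped.
    """
    current: List[Frame] = []
    silent = 0
    for frame in frames:
        if rms(frame) >= threshold:
            current.append(frame)
            silent = 0
        elif current:
            current.append(frame)
            silent += 1
            if silent >= max_silent_frames:
                # Utterance complete: this is where it would go to the STT engine.
                yield current[: len(current) - silent]
                current, silent = [], 0
    if current:  # stream ended mid-utterance
        yield current[: len(current) - silent]
```

Fed the frames quiet, loud, loud, quiet, loud, four quiet frames, then one loud frame, the generator yields two utterances of 4 and 1 frames: the one-frame pause stays inside the first utterance, and the three-frame pause closes it. A production system would more likely use a trained voice activity detector, but the buffer, detect-pause, emit shape stays the same.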

2. High-Performance, Adaptive LLM:

• Use an LLM tuned for clarity and speed, such as one optimized for summarization and fast Q&A, to distill ideas quickly and generate comprehensible content.

• Fine-tune the LLM for context-aware understanding so that responses are tailored to diverse task domains, and use active learning and reinforcement strategies to keep improving its performance over time.
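One way to read "context-based understanding" is routing each transcript to a domain-specific instruction before it reaches the model. The sketch below assumes a chat-style message list of `role`/`content` dictionaries and uses a crude keyword router. `DOMAIN_PROMPTS`, `detect_domain`, and `build_prompt` are hypothetical names; in practice a fine-tuned model or a trained classifier would replace the keyword rules.

```python
from typing import Dict, List

# Hypothetical per-domain instructions: one model, different task framings.
DOMAIN_PROMPTS: Dict[str, str] = {
    "summarize": "Distill the user's idea into at most three short points.",
    "qa": "Answer the question directly in one or two sentences.",
    "general": "Respond clearly and concisely.",
}

QUESTION_WORDS = ("what", "why", "how", "who", "when", "where", "which")


def detect_domain(utterance: str) -> str:
    """Very rough keyword router (illustrative only, easily fooled)."""
    text = utterance.strip().lower()
    if text.startswith(("summarize", "sum up", "recap")):
        return "summarize"
    if text.endswith("?") or text.startswith(QUESTION_WORDS):
        return "qa"
    return "general"


def build_prompt(utterance: str) -> List[Dict[str, str]]:
    """Pair the cleaned transcript with the instruction for its detected domain."""
    domain = detect_domain(utterance)
    return [
        {"role": "system", "content": DOMAIN_PROMPTS[domain]},
        {"role": "user", "content": utterance.strip()},
    ]
```

Keeping the routing outside the model makes the domain decision cheap and inspectable, at the cost of brittle rules.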

3. Seamless TTS Feedback Loop:

• Immediately convert the LLM’s generated text back into spoken words through an ultra-low-latency TTS engine so that users receive auditory feedback that syncs seamlessly with their thought process.

• Enable an iterative dialogue where the user’s spoken clarifications feed back into the system, creating a dynamic loop that refines content, sparks inspiration, and reduces friction in thought articulation.
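The iterative loop can be sketched with the LLM and TTS stages passed in as plain functions, so each spoken clarification is appended to a rolling history that the next reply sees. `respond` and `speak` are hypothetical stand-ins for real engines, the `Dialogue` and `run_loop` names are illustrative, and the character budget is a rough proxy for a model's token limit.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List

Message = Dict[str, str]  # assumed chat format: {"role": ..., "content": ...}


@dataclass
class Dialogue:
    """Rolling history so each spoken clarification shapes the next reply."""

    max_chars: int = 4000  # rough, hypothetical stand-in for a token budget
    history: List[Message] = field(default_factory=list)

    def context(self) -> List[Message]:
        """Newest turns that fit the budget, returned oldest first.

        The newest message is always kept, even if it alone exceeds the budget.
        """
        kept: List[Message] = []
        budget = self.max_chars
        for i, msg in enumerate(reversed(self.history)):
            if i > 0 and len(msg["content"]) > budget:
                break
            kept.append(msg)
            budget -= len(msg["content"])
        return list(reversed(kept))


def run_loop(
    utterances: Iterable[str],                # transcripts from the STT stage
    respond: Callable[[List[Message]], str],  # hypothetical LLM call
    speak: Callable[[str], None],             # hypothetical TTS call
    dialogue: Dialogue,
) -> None:
    """One listen -> think -> speak cycle per utterance."""
    for text in utterances:
        dialogue.history.append({"role": "user", "content": text})
        reply = respond(dialogue.context())
        dialogue.history.append({"role": "assistant", "content": reply})
        speak(reply)
```

With stubs such as `respond=lambda msgs: f"reply to {len(msgs)} messages"` and `speak=print`, a second utterance like "Make it shorter" is answered with three messages of context: the first request, the first reply, and the clarification.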

4. Synchronized Integration for High Cognitive Throughput:

• Architect the system to ensure nearly instantaneous transitions between user voice input, text processing by the LLM, and auditory output—all synchronized to maintain the natural rhythm of thought flow.

• Optimize hardware and software pipelines (e.g., using edge computing or dedicated TTS/LLM accelerators) to reduce processing lag and prevent any cognitive disruption or workflow “hiccups.”
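Much of the perceived lag comes from waiting for a full reply before speaking. A common mitigation, sketched here with `asyncio`, is to stream the model's output, cut it at sentence boundaries, and start speaking the first sentence while later ones are still being generated. `fake_llm_stream` and `tts_worker` are placeholders for real streaming LLM and TTS clients, and the regex sentence splitter is a simplifying assumption (it will also split after abbreviations such as "e.g.").

```python
import asyncio
import re
from typing import AsyncIterator, List

# Split on whitespace that follows sentence-ending punctuation.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


async def fake_llm_stream(text: str, delay: float = 0.01) -> AsyncIterator[str]:
    """Placeholder for a streaming LLM: yields the reply word by word."""
    for word in text.split(" "):
        await asyncio.sleep(delay)  # simulated generation time per token
        yield word + " "


async def sentence_chunker(tokens: AsyncIterator[str], out: asyncio.Queue) -> None:
    """Forward each complete sentence as soon as it appears in the stream."""
    buffer = ""
    async for tok in tokens:
        buffer += tok
        *done, buffer = SENTENCE_END.split(buffer)  # last piece may be incomplete
        for sentence in done:
            await out.put(sentence.strip())
    if buffer.strip():  # flush a final sentence with no closing punctuation
        await out.put(buffer.strip())
    await out.put(None)  # end-of-reply marker


async def tts_worker(inp: asyncio.Queue, spoken: List[str]) -> None:
    """Placeholder for a TTS engine: 'speaks' each sentence as it arrives."""
    while (sentence := await inp.get()) is not None:
        spoken.append(sentence)  # a real engine would synthesize audio here


async def speak_reply(text: str) -> List[str]:
    """Run generation and speech concurrently, linked by a queue."""
    queue: asyncio.Queue = asyncio.Queue()
    spoken: List[str] = []
    await asyncio.gather(
        sentence_chunker(fake_llm_stream(text), queue),
        tts_worker(queue, spoken),
    )
    return spoken
```

For example, `asyncio.run(speak_reply("Hello there. How are you? Fine."))` returns `['Hello there.', 'How are you?', 'Fine.']`, and the TTS stage receives "Hello there." while the rest of the reply is still streaming.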

5. Enhanced Cognitive Flow & Iterative Refinement:

• Incorporate real-time feedback and clarification suggestions, allowing the user to continuously refine their input as the LLM learns contextual nuances through active interaction.

• Employ adaptive reinforcement methods so that the system evolves with each interaction, bringing increased clarity, precision, and efficiency with every cycle of dialogue.
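The post does not say which reinforcement method it has in mind. As one concrete, deliberately simple stand-in, an epsilon-greedy multi-armed bandit can learn which response style a user prefers from per-turn feedback. The style names, the reward signal (for example, 1.0 when the user moves on and 0.0 when they have to clarify), and the `StyleBandit` class are assumptions for illustration; this adapts a choice of style, not the model's weights.

```python
import random
from typing import Dict, List, Optional


class StyleBandit:
    """Epsilon-greedy bandit over response styles, driven by user feedback."""

    def __init__(self, styles: List[str], epsilon: float = 0.1,
                 seed: Optional[int] = None) -> None:
        self.epsilon = epsilon  # probability of trying a random style
        self.counts: Dict[str, int] = {s: 0 for s in styles}
        self.values: Dict[str, float] = {s: 0.0 for s in styles}  # mean reward
        self.rng = random.Random(seed)

    def choose(self) -> str:
        """Explore with probability epsilon, otherwise pick the best style so far."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.counts))
        return max(self.values, key=self.values.__getitem__)  # ties: first style

    def update(self, style: str, reward: float) -> None:
        """Incremental mean: v <- v + (r - v) / n.

        Hypothetical reward: 1.0 if the user moved on, 0.0 if they had to clarify.
        """
        self.counts[style] += 1
        n = self.counts[style]
        self.values[style] += (reward - self.values[style]) / n
```

For instance, with `epsilon=0.0`, after `update("detailed", 1.0)` and `update("concise", 0.0)`, `choose()` returns `"detailed"`. Keeping a small nonzero `epsilon` lets the system notice when a user's preference changes.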


Reply:

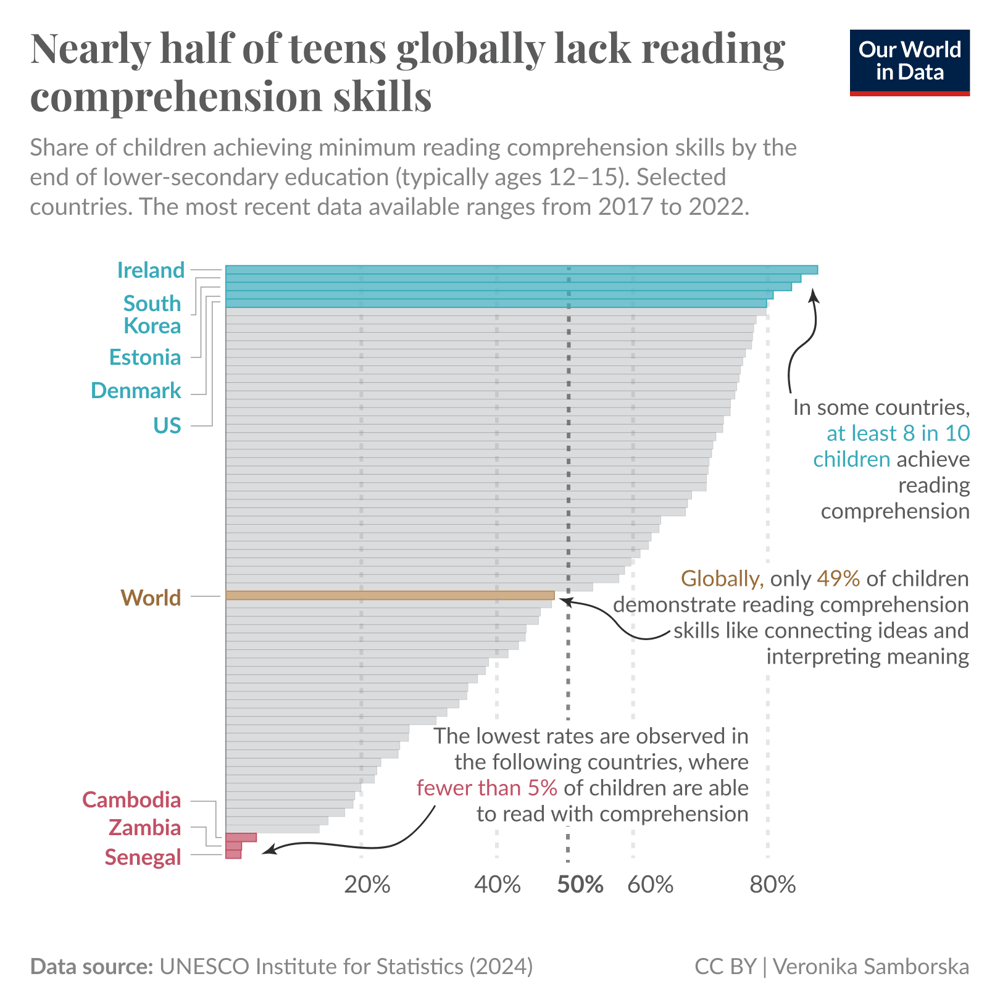

Nearly half of teenagers globally cannot read with comprehension.

Our World in Data


The chart shows the share of children at the end of lower-secondary school age — aged 12 to 15 — who meet the minimum proficiency set by UNESCO...