the new rust-nostr sdk api is awesome, kudos @npub1drvp...seet



Hi @npub1drvp...seet. I'm wondering if it's fine to call `.clone()` on the `client` instance whenever I need to use it.

I noticed that when I enable gossip, there's a huge delay in receiving events after subscribing. However, it works fine if I keep gossip disabled. I thought `.clone()` might be causing this, but I'm not sure, so I ended up implementing my own gossip mechanism for my app.

No, clone doesn't affect that, it's fine to use it. Have you tried the SQLite gossip storage, instead of the in-memory one?
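Cloning being harmless fits the usual Rust pattern for client handles: the type wraps its shared state in an `Arc`, so `.clone()` copies a pointer and bumps a reference count rather than duplicating connections. Below is a minimal std-only sketch of that pattern; `ClientHandle` and `Inner` are hypothetical names, and whether the SDK's `Client` is built exactly this way is an assumption:

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;

/// Hypothetical shared state (stands in for pools, stores, etc.).
struct Inner {
    subscriptions: AtomicUsize,
}

/// Cheap-to-clone handle: cloning copies the `Arc`, never `Inner` itself.
#[derive(Clone)]
struct ClientHandle {
    inner: Arc<Inner>,
}

impl ClientHandle {
    fn new() -> Self {
        Self {
            inner: Arc::new(Inner {
                subscriptions: AtomicUsize::new(0),
            }),
        }
    }

    /// Every clone updates the same counter, because they share `Inner`.
    fn subscribe(&self) {
        self.inner.subscriptions.fetch_add(1, Ordering::SeqCst);
    }

    fn subscription_count(&self) -> usize {
        self.inner.subscriptions.load(Ordering::SeqCst)
    }
}
```

In this model every clone observes the same state, which is why cloning on each use should not, by itself, slow down event delivery.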

@reya In addition to the SQLite gossip store, this PR should also help. If you have the opportunity to test it, let me know if it's faster.

GitHub

sdk: improve gossip concurrency with per-key semaphore system by yukibtc · Pull Request #1250 · rust-nostr/nostr

Replace the global single-permit semaphore with a per-key semaphore, improving updates concurrency.

Add unit tests to ensure the system is deadlock...
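The idea in the PR description can be illustrated with blocking std primitives: instead of one global lock that every gossip update must acquire, each key gets its own lock, so updates for different keys can proceed in parallel. This is a hypothetical sketch (`PerKeyLocks` is a made-up name, and a `Mutex` plays the role of a single-permit semaphore per key); it is not the PR's async implementation:

```rust
use std::collections::HashMap;
use std::sync::{Arc, Mutex};

/// Hands out one lock per key (e.g. per public key), so updates for
/// different keys no longer serialize behind a single global permit.
#[derive(Default)]
struct PerKeyLocks {
    locks: Mutex<HashMap<String, Arc<Mutex<()>>>>,
}

impl PerKeyLocks {
    /// Returns the lock for `key`, creating it on first use.
    /// The outer map lock is held only while looking up or inserting.
    fn lock_for(&self, key: &str) -> Arc<Mutex<()>> {
        let mut map = self.locks.lock().unwrap();
        map.entry(key.to_string())
            .or_insert_with(|| Arc::new(Mutex::new(())))
            .clone()
    }
}
```

A production version would presumably also evict entries for keys nobody is using; otherwise the map only grows.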

can you be more concrete, please? name one good thing about it

pretty hard starting from that 4 letter word in the name

"reya"? "yuki"?

ah, you haven't learned yet about my hate for the Mozilla-sponsored hipsterlang. RUST.

also i said "title" nostr-rust

ok, "name" of the library.

it's a good language

it's hard to read, overly complex, and abuses MMU immutability flags for no reason. and there are no coroutines or atomic FIFOs (channels), and it takes as long to compile as C++

nostr-rs-relay takes almost exactly as long to build as strfry. my relay builds from scratch, with a totally cleared-out module cache, in about 35 seconds; those other two relays take 11 minutes on a 64 GB system with an SSD and a 6-core/12-thread Ryzen 7.

if you had gotten used to the typical 3-5 second rebuild time after editing code, like i have for the last 9 years, you would laugh in Golang.

there are coroutines and atomic channels in tokio, compilation times are faster now, and it can be hard to read, but not necessarily

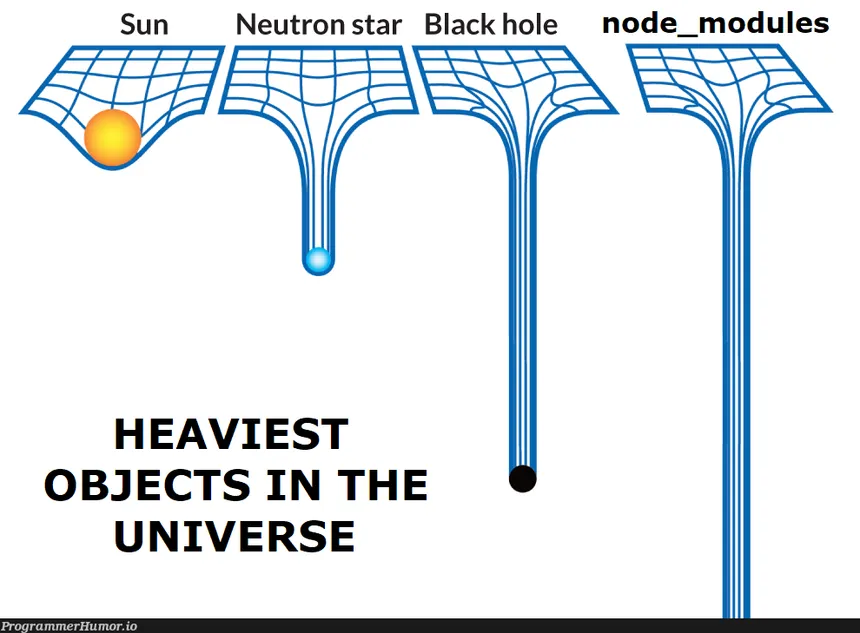

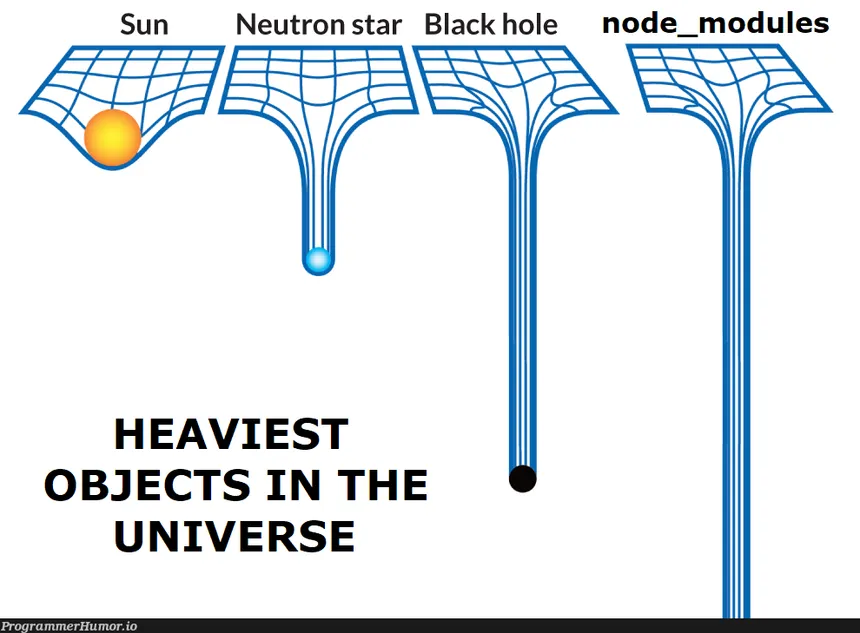
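On the channels point: in addition to Tokio's async channels, the standard library provides a FIFO channel in `std::sync::mpsc`. A small illustration with a producer thread (`fifo_demo` is just a demo function):

```rust
use std::sync::mpsc;
use std::thread;

/// Sends `n` numbered messages from a worker thread and collects them in order.
fn fifo_demo(n: u32) -> Vec<u32> {
    let (tx, rx) = mpsc::channel();
    let producer = thread::spawn(move || {
        for i in 0..n {
            tx.send(i).expect("receiver is still alive");
        }
        // `tx` is dropped when this closure returns, ending `rx.iter()` below.
    });
    let received: Vec<u32> = rx.iter().collect();
    producer.join().expect("producer thread panicked");
    received
}
```

Messages arrive in the order they were sent, and the receiver's iterator ends once every `Sender` has been dropped.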

compilation times are only faster if you eliminate the download and compilation of the dependencies. sure, you only have to deal with it once, but i'm just gonna say that the cargo build system is almost a carbon copy of the system that Go uses, except it clutters up your repos with `target` directories that are typically in the gigabytes, not dissimilar to node_modules

and all this for a 5% performance advantage over a language that does the same thing (nostr-rs-relay versus khatru, orly, or rely). in fact, according to benchmarks i made about 5 months ago, before i started vibe coding, both strfry and nostr-rs-relay are at the bottom of the rankings.

so, uh, yeah. if you can optimize the code well, sure, but a 5% boost in performance and peak memory utilization?

it's not worth it. and this is also why i quit working for shitcoin companies: from 2022 on, they all abandoned my fast, sleek language in favor of this hipster tire fire.

I cannot use SQLite yet, because it depends on Tokio.

I got this error after updating to this commit:

/src/client/gossip/semaphore.rs:137:9:

there is no reactor running, must be called from the context of a Tokio 1.x runtime

Hi @npub1drvp...seet, could you re-add the `resubscribe` method for relays? My app handles authentication requests manually, but none of the `resubscribe`-related methods are exposed in the new API.

Out of curiosity, why are you manually handling auth requests? Are there issues with the SDK implementation?

I want to give users full control over the flow. Users can approve/reject auth requests. You can check the code here: https://git.reya.su/reya/coop/src/branch/redesign-2/crates/relay_auth/src/lib.rs

Ahh, with `AdmitPolicy::admit_auth` it's possible to handle the approve/reject flow while still using the SDK implementation:

GitHub

sdk: add `AdmitPolicy::admit_auth` to control relay authentication by yukibtc · Pull Request #1218 · rust-nostr/nostr

Closes #739
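The approve/reject flow described above can be modelled independently of the SDK: forward each AUTH request to the UI and block until the user answers. A std-only sketch; `AuthDecision`, `AuthPrompt`, and `UserAuthGate` are hypothetical names, and how this would plug into `AdmitPolicy::admit_auth` (whose exact signature isn't shown in this thread) is an assumption:

```rust
use std::sync::mpsc::{self, Sender};
use std::time::Duration;

/// Hypothetical decision type; not the SDK's own enum.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum AuthDecision {
    Approve,
    Reject,
}

/// What the UI receives: which relay asked, plus a channel for the answer.
struct AuthPrompt {
    relay_url: String,
    reply: Sender<AuthDecision>,
}

/// Forwards each AUTH request to the UI and waits for the user's answer.
struct UserAuthGate {
    to_ui: Sender<AuthPrompt>,
    wait: Duration,
}

impl UserAuthGate {
    fn decide(&self, relay_url: &str) -> AuthDecision {
        let (reply_tx, reply_rx) = mpsc::channel();
        let prompt = AuthPrompt {
            relay_url: relay_url.to_string(),
            reply: reply_tx,
        };
        if self.to_ui.send(prompt).is_err() {
            return AuthDecision::Reject; // UI is gone: fail closed.
        }
        // No answer in time, or the prompt was dismissed: also reject.
        reply_rx.recv_timeout(self.wait).unwrap_or(AuthDecision::Reject)
    }
}
```

Failing closed (rejecting on timeout or a dismissed prompt) keeps the app from authenticating to a relay the user never approved.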

nice, i will look into it.

Hi @npub1drvp...seet

Do you have any plans to use `async_utility` for the gossip-sqlite crate? I can work on this; it would let me use it in my app in the meantime.

Yes, I started working on it a couple of days ago (PR linked below). I'm working on making all the libraries runtime-agnostic, and I hope to have a PR ready soon.

GitHub

Replace `sqlx` with `rusqlite` by yukibtc · Pull Request #1252 · rust-nostr/nostr

This change is needed for:

support any kind of async runtime (Multi-runtime support #921)

Add wasm32-unknown-unknown support to nostr-sqlite and ...
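For context on the earlier panic: `there is no reactor running, must be called from the context of a Tokio 1.x runtime` is what Tokio raises when one of its runtime-dependent APIs is called outside a Tokio runtime. One common way to make a library runtime-agnostic is to have it spawn work through a trait the application implements, instead of calling a specific runtime directly. A hypothetical sketch (`Spawner`, `ThreadSpawner`, and `refresh_in_background` are made-up names; this is not necessarily how rust-nostr or `async_utility` does it):

```rust
use std::sync::mpsc;
use std::thread;

/// The library asks the application how to run background work,
/// instead of hard-coding one runtime's `spawn`.
trait Spawner: Send + Sync {
    fn spawn(&self, job: Box<dyn FnOnce() + Send + 'static>);
}

/// One possible implementation, backed by plain OS threads.
struct ThreadSpawner;

impl Spawner for ThreadSpawner {
    fn spawn(&self, job: Box<dyn FnOnce() + Send + 'static>) {
        // The JoinHandle is dropped, so the thread runs detached.
        thread::spawn(job);
    }
}

/// Library code written against the trait, not against a specific runtime.
fn refresh_in_background(spawner: &dyn Spawner, done: mpsc::Sender<&'static str>) {
    spawner.spawn(Box::new(move || {
        // ... background work would go here ...
        let _ = done.send("refreshed");
    }));
}
```

An app on another executor would supply its own `Spawner` implementation; the library never assumes which runtime is running.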

It looks like nostr-sdk automatically runs negentropy sync when relays are added. I think this causes a bottleneck in my app and creates significant delays. My app flow works like this:

1. Users launch the app

2. The app connects to bootstrap relays

3. The app gets the user's relay list and verifies its existence

4. If the relay list exists → Get profile → Get and verify messaging relays

The flow is simple and runs step by step, so I don't think the backend affects it much. If I disable gossip, it runs fine.
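Because each step in this flow waits on the previous one, any per-step delay adds up across the whole chain. A std-only sketch of steps 3 and 4; the `Source` trait and the `Startup` outcomes are hypothetical stand-ins for the app's relay queries, and step 2 (connecting to the bootstrap relays) is assumed to have already happened:

```rust
/// Possible outcomes of the startup flow (hypothetical).
#[derive(Debug, PartialEq)]
enum Startup {
    NoRelayList,
    NoMessagingRelays { profile: Option<String> },
    Ready { profile: Option<String>, messaging_relays: Vec<String> },
}

/// Hypothetical data source; in the real app each call is a relay query.
trait Source {
    fn relay_list(&self, user: &str) -> Option<Vec<String>>;
    fn profile(&self, user: &str) -> Option<String>;
    fn messaging_relays(&self, user: &str) -> Vec<String>;
}

/// Runs steps 3 and 4 strictly in order, stopping early if the relay list is missing.
fn start<S: Source>(src: &S, user: &str) -> Startup {
    // Step 3: fetch the user's relay list and verify that it exists.
    match src.relay_list(user) {
        Some(list) if !list.is_empty() => {}
        _ => return Startup::NoRelayList,
    }
    // Step 4: only then fetch the profile and the messaging relays.
    let profile = src.profile(user);
    let messaging_relays = src.messaging_relays(user);
    if messaging_relays.is_empty() {
        Startup::NoMessagingRelays { profile }
    } else {
        Startup::Ready { profile, messaging_relays }
    }
}
```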

I've done some debugging. After I create subscriptions, the relay responds immediately, but somehow the notification channel isn't receiving events yet.

I've adjusted `ok_timeout`/`timeout` to 1 or 2 seconds, and now everything seems fast, as if gossip were disabled. I think there's a bottleneck in how notifications are handled, cc @npub1drvp...seet

Does this happen with the in-memory gossip store, or with the SQLite one? Or both?

Both. I just tested the SQLite backend, and it still has the same issue.

Hi @npub1drvp...seet,

I think I've found the problem here. It looks like the SDK is waiting for the timeout to finish before returning the result, instead of returning immediately after receiving the event from the relay.

I've created a simple flow like this:

1. Spawn a task to listen for notifications via `client.notifications()`

2. In another task, send an event to the relay and get the output: `let event = client.send_event(event).await?`

3. You will see the notification listener in task 1 receive the OK event from the relay immediately.

4. However, in task 2, `send_event` only returns after 10 seconds (the default timeout).
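One plausible explanation for this pattern, and the one suggested in the reply below, is that the caller waits for an OK from every relay in the pool and one of them never answers. A std-only sketch of that waiting logic (`collect_oks` is hypothetical, not the SDK's code) shows that it returns immediately when every relay answers, but waits out the full timeout when one stays silent:

```rust
use std::sync::mpsc::Receiver;
use std::time::{Duration, Instant};

/// Collects OK replies from `expected` relays, returning as soon as all of
/// them have answered or when `timeout` elapses, whichever comes first.
fn collect_oks(replies: &Receiver<String>, expected: usize, timeout: Duration) -> Vec<String> {
    let deadline = Instant::now() + timeout;
    let mut got = Vec::new();
    while got.len() < expected {
        let now = Instant::now();
        if now >= deadline {
            break;
        }
        match replies.recv_timeout(deadline - now) {
            Ok(relay) => got.push(relay),
            Err(_) => break, // timed out, or every sender was dropped
        }
    }
    got
}
```

With a shorter timeout, the silent relay costs less wall-clock time, which would match the observation that lowering it to 1 or 2 seconds made everything feel fast.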

Sorry for posting the issue here. My GH account is locked; I will create another one later.

Forgot to attach the screenshot.

Thanks, I'll check it, but I think that it's due to an unreachable relay that has just been added to the pool.

The disconnected relays are skipped (return error instead of waiting) after the second failed attempt.

To confirm it, can you try decreasing the connection timeout, configurable via the Client Builder? (I added this feature to the master branch yesterday.)

Or do you have a small code snippet to reproduce it? And which relays did you use?
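The skip rule described above can be modelled as a small failure counter. This is a hypothetical std-only sketch (`RelayHealth` and its methods are made-up names, and resetting the count on success is an assumption), not the SDK's implementation; it only illustrates why a freshly added, unreachable relay can still be waited on during its first attempts:

```rust
use std::collections::HashMap;

/// Per-relay bookkeeping: once a relay reaches `max_failures` failed
/// connection attempts, callers get an error right away instead of waiting.
struct RelayHealth {
    failures: HashMap<String, u32>,
    max_failures: u32,
}

impl RelayHealth {
    fn new(max_failures: u32) -> Self {
        Self { failures: HashMap::new(), max_failures }
    }

    fn record_failure(&mut self, url: &str) {
        *self.failures.entry(url.to_string()).or_insert(0) += 1;
    }

    /// Assumption: a successful connection clears the failure count.
    fn record_success(&mut self, url: &str) {
        self.failures.remove(url);
    }

    /// `Err` means "skip this relay now"; `Ok` means it is still waited on.
    fn check(&self, url: &str) -> Result<(), String> {
        let n = self.failures.get(url).copied().unwrap_or(0);
        if n >= self.max_failures {
            Err(format!("{url}: skipped after {n} failed attempts"))
        } else {
            Ok(())
        }
    }
}
```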

Regarding the issues, posting them here on Nostr is perfectly fine.

But instead of using text notes, NIP-34 would be better. Here is the repo. For desktop, you can use gitplaza by @dluvian.

GitWorkshop.dev

Decentralized github alternative over Nostr

Codeberg.org

gitplaza

Decentralized GitHub alternative on desktop

I've tried this API. It looks like I need to add a relay to the AdmitPolicy first to make it work. However, in my app, when an auth request arrives, the user has to act on it before the auth response can be processed.

Also, with automatic authentication, the SDK doesn't prevent duplicate auth requests, which causes some issues.

The URL in the AdmitPolicy is there to let you know which relay the AUTH request is coming from.

You don't have to add it, it's added by the SDK.
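On the duplicate-request point above, an app-side workaround is to track which relays already have an AUTH flow in progress and ignore repeats until it finishes. A std-only sketch; `PendingAuth` is a hypothetical helper, not part of the SDK, and whether the SDK itself deduplicates these requests isn't established in this thread:

```rust
use std::collections::HashSet;
use std::sync::Mutex;

/// Lets only one AUTH flow per relay run at a time, so repeated challenges
/// from the same relay don't pile up duplicate prompts.
#[derive(Default)]
struct PendingAuth {
    in_flight: Mutex<HashSet<String>>,
}

impl PendingAuth {
    /// Returns `true` if the caller should start handling this relay's AUTH,
    /// `false` if one is already in progress.
    fn try_begin(&self, relay_url: &str) -> bool {
        self.in_flight.lock().unwrap().insert(relay_url.to_string())
    }

    /// Marks the relay's AUTH flow as finished (approved, rejected, or failed).
    fn finish(&self, relay_url: &str) {
        self.in_flight.lock().unwrap().remove(relay_url);
    }
}
```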