Big miners have been doing this and are going to keep doing it regardless of what nodes want, because nodes already accept much larger OP_RETURN sizes in mined blocks

... nodes already accept much larger OP_RETURN sizes in *mined blocks* ...

This change *is not* about changing what nodes accept in blocks (consensus)

It's changing what nodes gossip about pre-block

It's saying that if nodes ignore the gossip, folks will just send their transactions to the miners directly, at a higher cost that they have so far been happy to pay (shitcoin economics, see BRC20 vs. Runes)

"Core" is saying that pretending to not listen to gossip when you're going to accept the block anyway does not meaningfully stop spam, but it does affect miner centralization

Ironically, the only thing "Core" is forcing is a change that would actually hurt miners by reducing preferential miner fees and opportunities for miner centralization through degraded block relay

Ironically, the thing they are forcing is better for decentralization (accurate mempools, predictable fee estimates, less miner centralization pressure)

____

These are the arguments as I understand them so far, but I do agree that communication was handled poorly and that they should keep the option to configure this and not force a single setting on nodes

But I also agree that the default should help decentralization and network health. So I agree that raising the default size of OP_RETURNs that nodes *gossip* about, with an option to configure it, is good, given that nodes already accept a much larger limit in mined blocks today

None of these are consensus changes that can result in any sort of fork though. Nothing here changes what a valid mined transaction is. So comparisons to the blocksize war don't really make sense to me because it's not the same game theory at all
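A minimal sketch of the relay-versus-consensus split described in this post. The function names and the simplified checks are hypothetical and are not Bitcoin Core code. The 83-byte figure is the long-standing default for `-datacarriersize`, and consensus is modeled very roughly as "the script only has to fit in a block".

```python
# Illustrative model only: these helpers are hypothetical, not Bitcoin Core APIs.
OP_RETURN = 0x6A
DEFAULT_RELAY_LIMIT = 83      # bytes; historical default for -datacarriersize
MAX_BLOCK_WEIGHT = 4_000_000  # weight units; consensus limit on a whole block


def is_op_return(script: bytes) -> bool:
    """True if the output script starts with the OP_RETURN opcode."""
    return len(script) > 0 and script[0] == OP_RETURN


def relay_policy_accepts(script: bytes, limit: int = DEFAULT_RELAY_LIMIT) -> bool:
    """Policy: would this node gossip an unconfirmed tx carrying this output?

    Every other standardness rule is ignored in this sketch.
    """
    if not is_op_return(script):
        return True
    return len(script) <= limit


def consensus_accepts(script: bytes) -> bool:
    """Consensus: would this node accept the output inside a mined block?

    Rough stand-in: non-witness bytes weigh 4 units each, and there is no
    OP_RETURN-specific size rule, so the script only has to fit in a block.
    """
    return 4 * len(script) <= MAX_BLOCK_WEIGHT


small = bytes([OP_RETURN]) + b"\x00" * 40   # 41-byte OP_RETURN script
big = bytes([OP_RETURN]) + b"\x00" * 999    # 1000-byte OP_RETURN script

print(relay_policy_accepts(small), consensus_accepts(small))  # True True
print(relay_policy_accepts(big), consensus_accepts(big))      # False True
print(relay_policy_accepts(big, limit=100_000))               # True
```

Raising or removing `limit` only changes the first function. The second one, which decides whether a block is valid, is untouched, which is why no fork is possible here.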


Replies (3)

thanks for clarifying this misunderstanding

isn't making it more expensive already the point? making them go to the miners directly is the point.

and also filters don't affect fee estimations in a meaningful way. fee is not calculated based on what you have on your mempool that's not correct. even core is using more advanced methods to estimate fee. its just an argument.

and also everyone's mempool is not supposed to be the same anyway.

filters are changes nodes can make without causing a network fork. we need more options for filters, allow people to write their own filtering scripts and rules.

and when miners don't have similar filters to the rest of the network it takes longer for their blocks to propagate over the network, and if another miner finds another block in that time their block is ignored. which makes going against the network cost even more.

decentralization doesn't mean everyone is a copy of each other. it's normal for something decentralized to be messy, slow, clunky and not butter smooth. that's the point. pure chaos of disagreement, but of course within the limits of consensus.

and some of them talked about changing the consensus for this.

but changing consensus for something dynamic wouldn't make sense. consensus is the untouched law, and constitution, and filters are the community.

what filters nodes run collectively decides what is more expensive/harder to do in this network.

which keeps unwanted stuff niche and stops it from going mainstream, that's the point. not a great analogy but it's like the "unwritten rules".

funny thing is, they proposed this exact PR 2 years ago, and it got rejected because people said we can fix the new taproot exploit by updating and adding filters.

BUT the funny part is that the PR for the filters also got rejected because "it's controversial".

but suddenly this new PR is not?

they knew this would happen, they rejected every filtering solution. so 2 years later they can propose it again. when the issue has gotten bigger. they are giving solutions to issues they caused. because their agenda from the beginning was allowing more data. they planned this. they say it on their other PR, i think it was something like "we can try again later when utxo set is bigger".

> fee is not calculated based on what you have on your mempool that's not correct. even core is using more advanced methods to estimate fee. its just an argument

I'm sorry, you must be joking

In core, fee estimates are calculated entirely from the mempool. This isn't even up for debate, it's literally in the code.

- The `TxConfirmStats` class (in `src/policy/fees.cpp`) builds buckets of mempool transactions based on fee rates and then analyzes how long they take to be mined.

- If you unravel `TxConfirmStats::EstimateMedianVal` (same file), the code scans buckets of stored feerates built **from the mempool** and gives you the median feerate for the bucket that most closely matches your confirmation criterion.

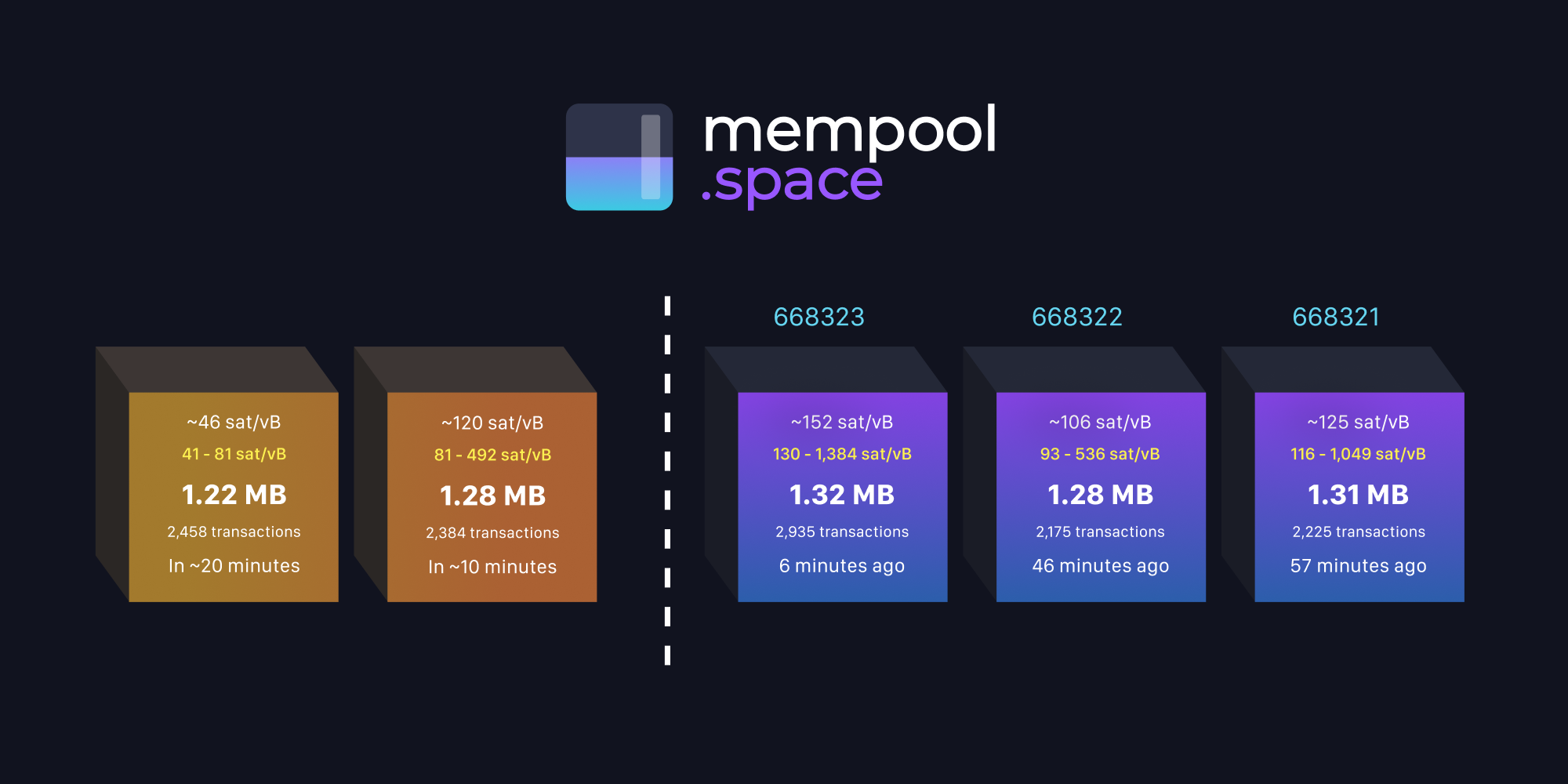
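To make the bucket idea concrete, here is a toy Python sketch of a bucket-based estimator. It is not Bitcoin Core's implementation: the class and method names are hypothetical, and it leaves out things the real code does, such as decaying old data and merging sparse buckets. It only shows the shape of the approach: group transactions the node saw in its mempool by feerate, record how long each took to confirm, then return the median feerate of the cheapest bucket that still meets the confirmation target.

```python
import bisect
from statistics import median


class BucketEstimator:
    """Toy feerate estimator; hypothetical names, not Bitcoin Core's classes."""

    def __init__(self, bucket_bounds):
        # Upper bounds (sat/vB) of each feerate bucket, in ascending order.
        self.bounds = list(bucket_bounds)
        # One list of (feerate, blocks_to_confirm) samples per bucket.
        self.samples = [[] for _ in self.bounds]

    def record_confirmed(self, feerate, entry_height, confirm_height):
        """Record a tx seen in the local mempool at entry_height and mined at
        confirm_height. A tx the node never admitted to its mempool has no
        entry height, so it can never be recorded here."""
        i = min(bisect.bisect_left(self.bounds, feerate), len(self.bounds) - 1)
        self.samples[i].append((feerate, confirm_height - entry_height))

    def estimate(self, target_blocks, success_threshold=0.85, min_samples=3):
        """Scan buckets from highest feerate down and return the median feerate
        of the cheapest bucket that still confirms within target_blocks."""
        best = None
        for bucket in reversed(self.samples):
            if len(bucket) < min_samples:
                continue  # simplification: skip sparse buckets
            ok = sum(1 for _, blocks in bucket if blocks <= target_blocks)
            if ok / len(bucket) >= success_threshold:
                best = bucket
            else:
                break  # cheaper buckets are assumed to do no better
        if best is None:
            return None
        return median(feerate for feerate, _ in best)


est = BucketEstimator([2, 5, 10, 20, 50])
history = [(1, 12), (2, 9), (2, 15), (4, 3), (5, 6), (4, 2), (5, 1),
           (8, 1), (9, 1), (10, 2), (30, 1), (40, 1), (45, 1)]
for feerate, waited in history:
    est.record_confirmed(feerate, entry_height=100, confirm_height=100 + waited)

print(est.estimate(target_blocks=2))  # 9
print(est.estimate(target_blocks=6))  # 4.5
```

The relevant detail for this thread is in `record_confirmed`: the estimator can only learn from transactions that passed through the node's own mempool.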

It's the same for other estimators as well. We rely primarily on the mempool.space estimator in the `bria` engine we use for Blink (`src/fees/client.rs` in the GaloyMoney/bria repo), as it's the most reliable we've found from years of observed transaction activity. And if you go to the mempool.space repo and look at how they do fees, `getRecommendedFee` is called (in `backend/src/api/fee-api.ts`), which if you follow the code uses mempool transactions and projected mempool blocks to estimate fees.
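A rough Python sketch of the "projected mempool blocks" idea, to show why the contents of the mempool matter for this style of estimator. This is an assumption-laden illustration and not the mempool.space code: the function names are hypothetical, the packing is a simple greedy pass, and the block size is shrunk in the example so the numbers stay readable.

```python
from statistics import median

BLOCK_VSIZE = 1_000_000  # virtual bytes per projected block (4,000,000 weight units / 4)


def project_blocks(mempool, block_vsize=BLOCK_VSIZE):
    """Greedily pack (feerate, vsize) txs into projected blocks, highest feerate first."""
    blocks, current, used = [], [], 0
    for feerate, vsize in sorted(mempool, key=lambda tx: tx[0], reverse=True):
        if used + vsize > block_vsize and current:
            blocks.append(current)   # current block is full, start the next one
            current, used = [], 0
        current.append((feerate, vsize))
        used += vsize
    if current:
        blocks.append(current)
    return blocks


def recommended_fees(mempool, block_vsize=BLOCK_VSIZE):
    """Median feerate of each projected block: index 0 is 'next block', and so on."""
    return [median(feerate for feerate, _ in block)
            for block in project_blocks(mempool, block_vsize)]


# (feerate in sat/vB, vsize in vbytes); tiny block size for readability.
full = [(50, 400), (40, 400), (30, 400), (10, 400), (5, 400), (2, 400)]
# Same mempool as seen by a node whose filter rejected the two highest-paying txs.
filtered = [tx for tx in full if tx[0] < 40]

print(recommended_fees(full, block_vsize=1000))      # [45.0, 20.0, 3.5]
print(recommended_fees(filtered, block_vsize=1000))  # [20.0, 3.5]
```

In this toy case the filtering node projects a next-block feerate of 20 sat/vB while miners who see the full set would be filling that block at around 45 sat/vB.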

I think this is the issue lots of us are having with this "debate". Lots of things are being said that aren't even subjective, just objectively wrong.


Linked sources (GitHub):

- bitcoin/bitcoin: `src/policy/fees.cpp` at commit 8309a9747a8df96517970841b3648937d05939a3 (linked twice, for `TxConfirmStats` and `TxConfirmStats::EstimateMedianVal`)

- GaloyMoney/bria: `src/fees/client.rs` at commit 018f25b52f991886f1c6ee9713cee4ee641e8187

- mempool/mempool: `backend/src/api/fee-api.ts` at commit cba4308447341725587c232f77c102efc834d488