Now that OpenAI is officially VC-funded garbage, what are the best self-hosted and/or open-source options?

Cc: @Guy Swann


Replies (14)

Anthropic and Perplexity are still in good standing with me. Otherwise, maybe Ollama could do what you need.

Awesome. Thanks!

Venice.ai and select the llama3.1 model. Great option for a big model that you can’t run locally.

Otherwise a local llama3.1 20B is solid if you have the RAM
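For the "if you have the RAM" part, here is a rough back-of-the-envelope sketch. It assumes memory is dominated by the weights (parameter count times bits per weight) and ignores the KV cache and runtime overhead, so treat the result as a lower bound. The function name and the sizes in the comments are only illustrative inputs.

```python
def estimate_weight_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes).

    Assumption: every parameter is stored at the same precision. Real
    quantized files mix precisions, and inference needs extra memory for
    the context (KV cache), so actual usage will be higher.
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Example inputs: a 70B model at 4-bit quantization vs. 16-bit weights.
four_bit = estimate_weight_gb(70, 4)
sixteen_bit = estimate_weight_gb(70, 16)
```

The same arithmetic shows why quantization matters for home hardware: going from 16-bit to 4-bit weights cuts the weight footprint to a quarter.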

$50/year + pay with Bitcoin 👀

Very helpful! Investigating both for work, where we have great hardware, and for home, so this is great to know.

local grow! t-y Guy Swann

I insta-paid the yearly, lol

Honestly can’t tell the difference between Venice, Anthropic, and ChatGPT, except that I hit the context window a little faster on Venice than on the others.

But output is generally great. In fact, I used OpenAI the other day to generate chapter markers for my YouTube video and they were completely nonsensical. The topics were all ones we discussed, but exactly zero of the time codes matched, even though timestamps appear throughout the file. Llama (Venice) did a better job, but it stopped early because the transcript was huge. I had to cut it up.
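Cutting a transcript up by hand gets old fast, so here is a minimal sketch of one way to do it. It assumes the transcript is a list of lines that each start with their own time code, and it uses a character budget as a crude stand-in for the model's context limit. The `max_chars` default is an arbitrary placeholder, not a real limit for any provider.

```python
def chunk_transcript(lines, max_chars=8000):
    """Group transcript lines into chunks no longer than max_chars.

    Lines are never split, so each time code stays attached to its text.
    A single line longer than max_chars still becomes its own chunk.
    """
    chunks, current, size = [], [], 0
    for line in lines:
        line_len = len(line) + 1  # +1 for the newline that joins lines
        if current and size + line_len > max_chars:
            chunks.append("\n".join(current))
            current, size = [], 0
        current.append(line)
        size += line_len
    if current:
        chunks.append("\n".join(current))
    return chunks


# Tiny example with a deliberately small budget.
sample = ["00:00 intro", "00:45 topic one", "02:10 topic two"]
parts = chunk_transcript(sample, max_chars=30)
```

Each chunk can then be sent as its own request, and the chapter markers from each response concatenated in order.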

Appreciate the context, no pun intended. For 50 bucks a year, still, I am in.

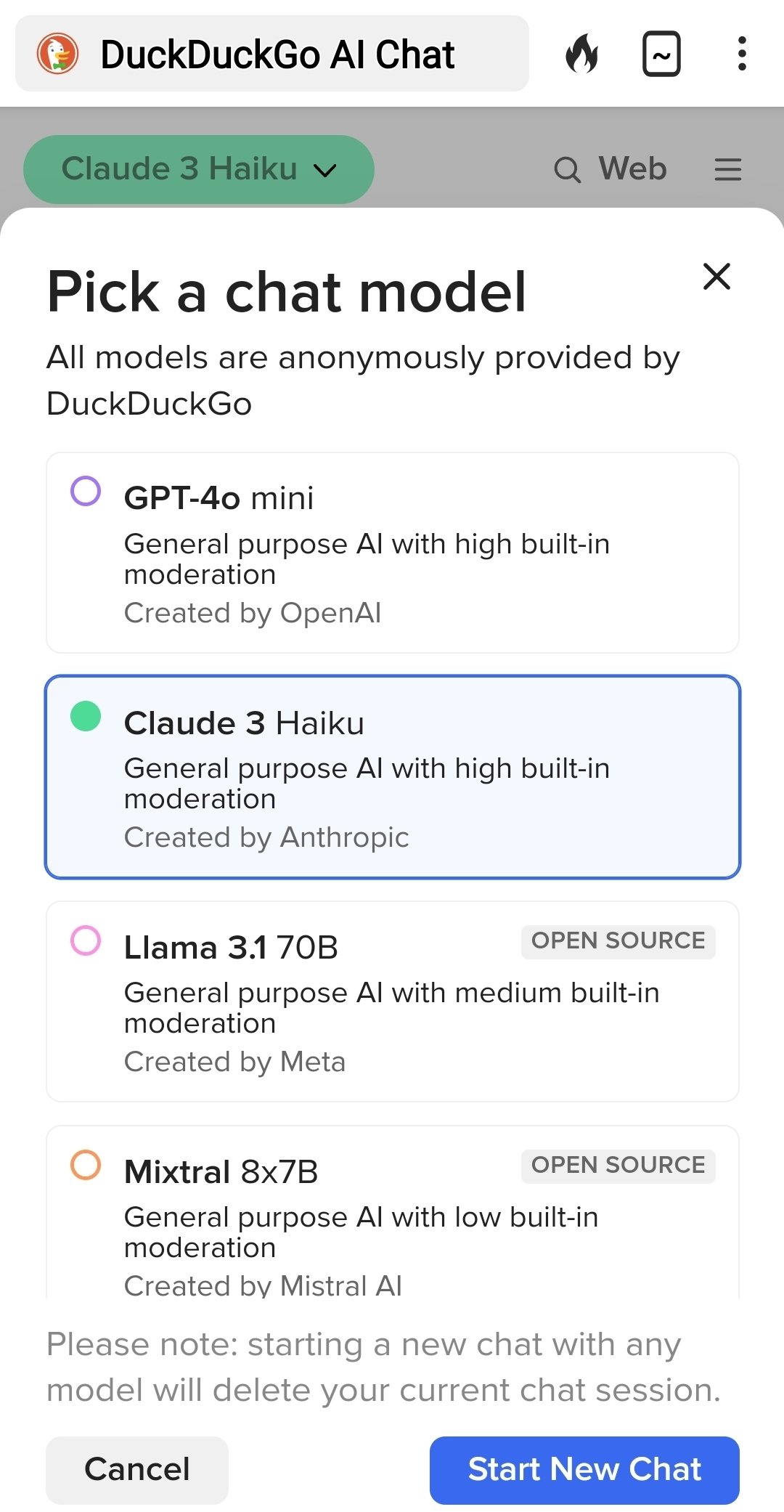

DuckDuckGo has a free AI chatbot with a Llama 3.1 70B option. I use it all the time, but Venice also does images, right?

Yeah Venice has a few other things, but also the ability to upload and work with PDFs, text files, etc.

If you use LLMs often enough, or as part of your workflow, it’s worth having a dedicated LLM provider, imo. The 405B parameter model is pretty damn good. Also interested to try out llama3.2, which dropped like a day ago.

Llama 3.2 is multimodal. Good stuff, I've been running a 90b-vision on deepinfra and it's good.

Install local LLM software and use the biggest models through deepinfra's API. It only costs a few cents a month, highly recommended.
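For anyone who would rather script it than use a desktop client, here is a minimal sketch of calling a hosted model over an OpenAI-style chat completions endpoint, using only the Python standard library. The endpoint URL and the model ID below are assumptions on my part and should be checked against deepinfra's current docs; `build_chat_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

# Assumptions: verify both values against the provider's documentation.
API_URL = "https://api.deepinfra.com/v1/openai/chat/completions"
MODEL_ID = "meta-llama/Meta-Llama-3.1-405B-Instruct"


def build_chat_request(prompt, api_key, model=MODEL_ID, url=API_URL):
    """Build (but do not send) a POST request for a single-turn chat."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt, api_key):
    """Send the request and return the reply text.

    Assumes the response follows the OpenAI chat completions shape.
    """
    request = build_chat_request(prompt, api_key)
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Usage would look like `ask("Summarize this transcript chunk...", api_key)` with a key from your own account. Most local LLM frontends that accept a custom OpenAI-compatible base URL need the same three pieces: the URL, the key, and the model ID.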

Oh damn will check this one out. Thanks for the info!

I’ve spent the last couple of days digging into providers that I can use at work, and deepinfra is incredible. It’s shocking how bad the data privacy terms of the OpenAI API are compared to deepinfra's. At first I was confused about why they don’t have as many models as OpenRouter, but I imagine a lot of it comes down to being able to maintain user-friendly terms of service. Thanks again for sharing!