🧵 REPLYING TO @rohanpaul_ai (Rohan Paul):

┌─ "The diminishing returns from pure model scaling are making many experts concerned.

The worry is simple: progress is slowing even with more chips and cash.

Earlier in March, a survey of 475 AI researchers concluded that AGI was a "very unlikely" outcome of the current development approach of simply making LLMs bigger.

---

futurism.com/scientists-worried-ai-pleateau

https://file.nostrmedia.com/f/72f3e1dddf861f724341704ebcb9a8b2e3319dd751a2d4f8886b261d0a4ef3c8/b899ea9755fb410a28b1d80374c31cebad7f09e003f6c280e2fa5ffd63763791.jpg"

└─────────────────────────────────

💬 ELON'S REPLY:

@rohanpaul_ai False, intelligence still scales logarithmically with compute.

And it doesn’t make sense to call them LLMs when they’re natively multimodal.

Just models – it’s cleaner.

Source:

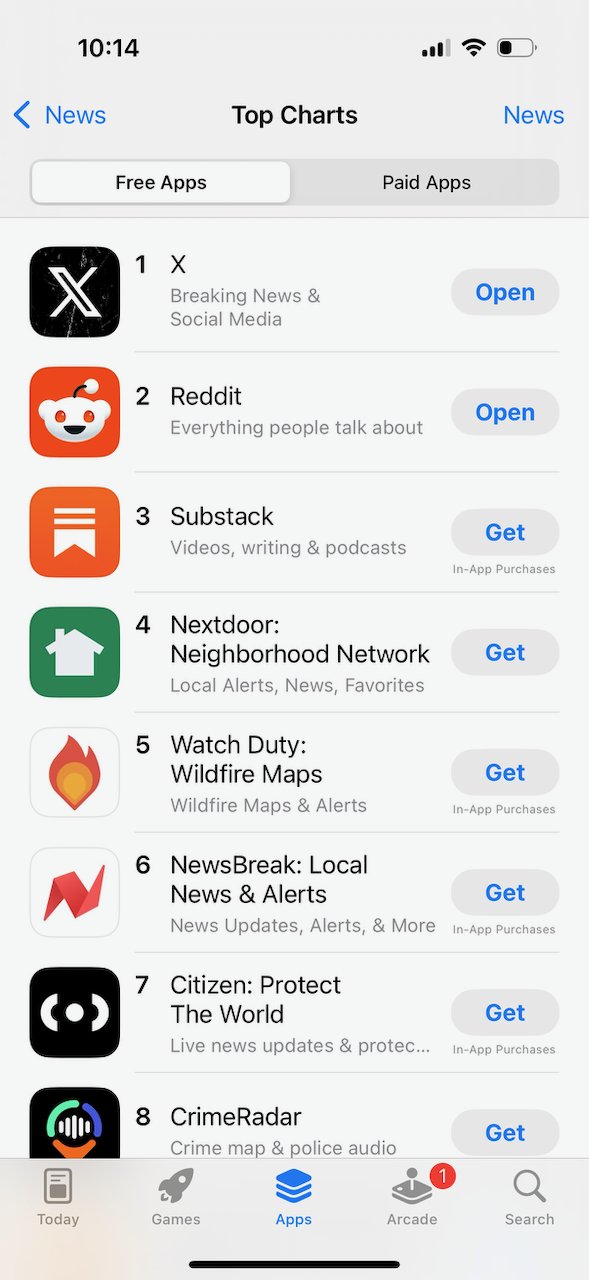

X (formerly Twitter)

Elon Musk (@elonmusk) on X

@rohanpaul_ai False, intelligence still scales logarithmically with compute.

And it doesn’t make sense to call them LLMs when they’re natively...