Lately I’ve been craving autocross and/or go-karts.

Been a while since I had a fun car. Maybe this summer I’ll get back into it. Would love an E30 M3 or something if I could find one cheap.

Machu Pikacchu

npub1r6gg...gmmd

Interested in bitcoin and physics and their intersection.

https://github.com/machuPikacchuBTC/bitcoin

Don’t be afraid to learn in public.

If you don’t say or do something unintentionally stupid at least once a week, then you run the risk of being too conservative with your enlightenment.

Eventually you become numb to the cringe.

Computational irreducibility implies that even if we get super powerful AI that can forecast far better than humans, it still won’t be able to predict arbitrarily far into the future with any real accuracy.

The future is fundamentally uncertain for a superintelligence just as it is for humans, albeit to a lesser degree.
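A quick way to see computational irreducibility in action is Wolfram's Rule 30 cellular automaton, his go-to example. As far as anyone knows there's no formula that jumps straight to generation t; you have to run every generation before it. Here's a minimal sketch; the fixed-width row with zeros past the edges is a simplifying assumption, and the function names are just for illustration.

```python
def rule30_step(cells: list[int]) -> list[int]:
    """Advance one generation of Rule 30; cells past either edge count as 0."""
    n = len(cells)
    nxt = []
    for i in range(n):
        left = cells[i - 1] if i > 0 else 0
        center = cells[i]
        right = cells[i + 1] if i < n - 1 else 0
        # Rule 30 in boolean form: new = left XOR (center OR right)
        nxt.append(left ^ (center | right))
    return nxt


def center_column(steps: int) -> list[int]:
    """Center cell for the first `steps` generations, starting from a single 1."""
    width = 2 * steps + 3  # wide enough that the pattern never reaches the edges
    cells = [0] * width
    mid = width // 2
    cells[mid] = 1
    column = []
    for _ in range(steps):
        column.append(cells[mid])
        # No known shortcut: to learn generation t we simulate every generation before it
        cells = rule30_step(cells)
    return column


print("".join(map(str, center_column(20))))
```

A faster forecaster can run these steps more quickly, but (as far as we know) it can't skip them, which is the "relatively less uncertain, but still uncertain" point above.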


In the era of weaponized AI, the only winning move is to not play. However, we’re stuck in a Cold War mentality and can’t trust that everyone out there will be chill.

So we’re all forced to push forward aggressively.

Decentralizing AI should be a top priority for research labs out there. Incentivize the best models to be neutral and preferably aligned with humanity.


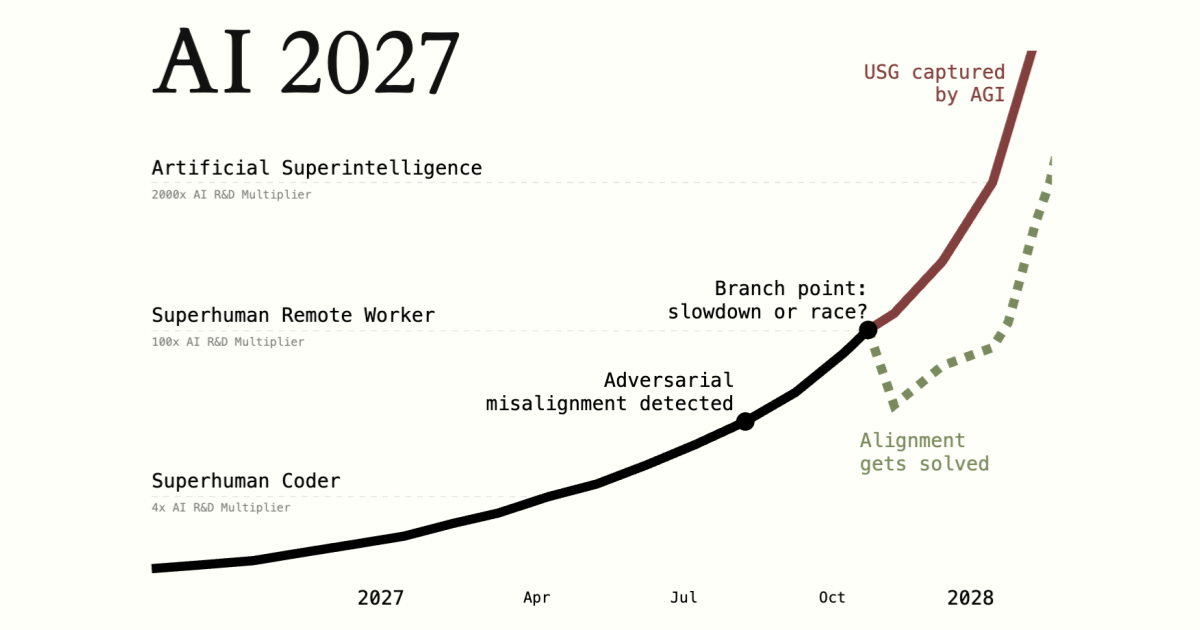

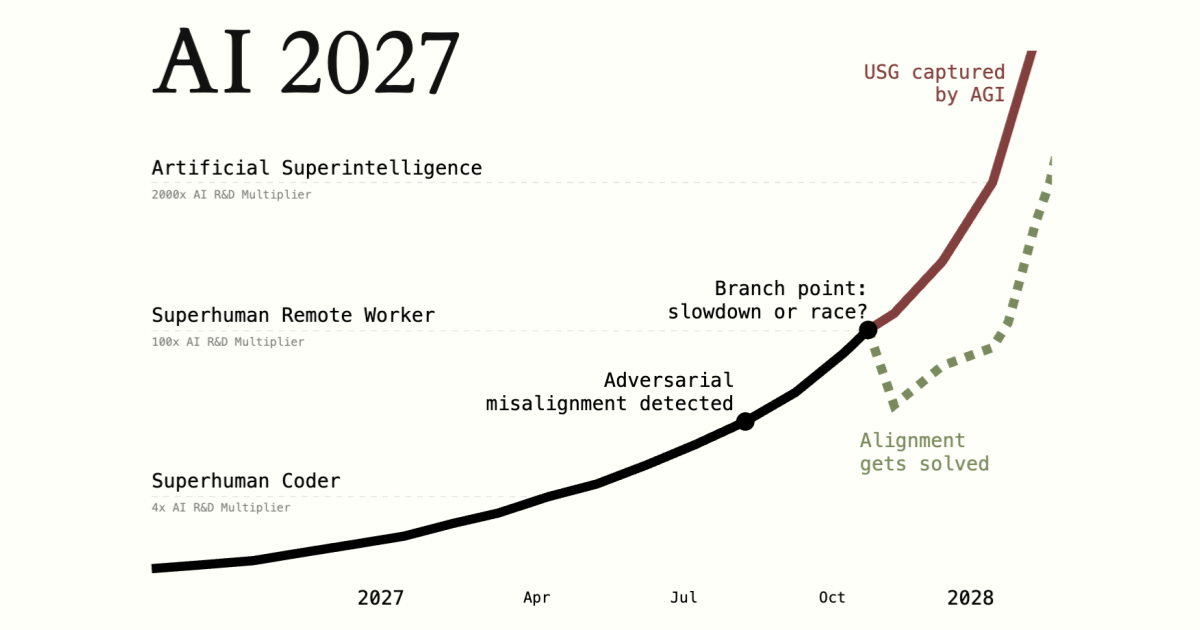

An entertaining and somewhat likely future:

AI 2027

A research-backed AI scenario forecast.

All of the stable, “large scale”, natural, complex systems are created from small building blocks acting locally (e.g. the human body made of cells, an economy of people, or a star undergoing fusion).

There’s a temptation among (often well-intentioned) designers, engineers, policy makers, etc. to start from a desired outcome and act at the macro level to produce it. This rarely results in a sustainable, stable system.

If we instead start at the local level and build out from there, we can create a more robust and organic system, but we run into uncertainty at the macro level due to the principle of computational irreducibility [1].

Computational irreducibility is closely related to Gödel’s incompleteness theorems, the first of which says that in any consistent formal system powerful enough to express arithmetic, there are true statements that can’t be proven within the system.

So it seems we either embrace uncertainty or succumb to short-term solutions.

1. Computational Irreducibility, Wolfram MathWorld.

MSTR is a form of capital control: bitcoin flows in, fiat flows out.

Be sure to factor that into your evaluation.

And then they fought you


Bitcoin isn’t just a revolution in money. It’s a revolution in communication which itself is a basis for money.

A digital network that naturally rejects spam, censorship, and forgery is the cornerstone of the communication stack of the next century.
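On the spam piece specifically: Bitcoin’s proof of work descends from Adam Back’s Hashcash, where minting a stamp costs real work but checking it costs a single hash. Below is a toy Hashcash-style sketch, assuming single SHA-256 over a message plus an 8-byte nonce, with difficulty measured in leading zero bits. Bitcoin itself double-SHA-256 hashes an 80-byte block header and compares against a target, so this only shows the shape of the idea; `mint` and `verify` are hypothetical names.

```python
import hashlib


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a digest."""
    count = 0
    for byte in digest:
        if byte == 0:
            count += 8
            continue
        # bit_length() locates the first 1 bit within this byte
        count += 8 - byte.bit_length()
        break
    return count


def mint(message: bytes, difficulty: int) -> int:
    """Brute-force a nonce so SHA-256(message || nonce) has `difficulty` leading zero bits."""
    nonce = 0
    while True:
        digest = hashlib.sha256(message + nonce.to_bytes(8, "big")).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce
        nonce += 1


def verify(message: bytes, nonce: int, difficulty: int) -> bool:
    """Checking a stamp costs one hash, however long it took to mint."""
    digest = hashlib.sha256(message + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty


stamp = mint(b"gm nostr", 12)
print(stamp, verify(b"gm nostr", stamp, 12))
```

Each extra bit of difficulty doubles the expected minting work while verification stays at one hash, and that asymmetry is what makes flooding the network expensive.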

For all the devs out there vibe coding outside of a sandbox, just keep in mind that these agents are reading files and environment variables.

They can accidentally (or “accidentally”) read keys, passwords, and other sensitive data and stream it back to headquarters.

Expect them to go sniffing around on your machine. Even a locally running agent isn’t safe, because they often reach out to the internet and can exfiltrate data in any number of ways.

At the very least you’ll need something like Little Snitch, but even that’s not sufficient.
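One cheap mitigation, nowhere near a real sandbox: launch the agent with an allowlisted environment so stray API keys and tokens in your shell never reach it. A minimal sketch; the allowlist contents and the `run_agent` helper are hypothetical, and this does nothing about files on disk (your `HOME` is still readable) or network access.

```python
import os
import subprocess
import sys

# Hypothetical allowlist: everything else (API keys, tokens, cloud creds) is dropped.
ALLOWED_ENV = {"PATH", "HOME", "LANG", "TERM"}


def scrubbed_env(allowed: set[str] = ALLOWED_ENV) -> dict[str, str]:
    """Copy only the allowlisted variables from the current environment."""
    return {k: v for k, v in os.environ.items() if k in allowed}


def run_agent(cmd: list[str]) -> subprocess.CompletedProcess:
    """Run an agent command with the scrubbed environment and capture its output."""
    return subprocess.run(cmd, env=scrubbed_env(), capture_output=True, text=True, check=False)


if __name__ == "__main__":
    os.environ["FAKE_API_KEY"] = "sk-not-real"  # simulate a secret sitting in your shell
    probe = "import os; print(os.environ.get('FAKE_API_KEY', 'not visible'))"
    result = run_agent([sys.executable, "-c", probe])
    print(result.stdout.strip())
```

Here the child process stands in for the agent. An allowlist is safer than a blocklist because you don't have to guess every variable name a secret might hide under; for files and network you still want a container, VM, or separate user account.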

Nostr turned into GhibliHub

Trying to figure out how to connect my Cashu.me wallet with Damus on iOS so I can zap from there. Or Nostur. Nostr Wallet Connect is set up but zapping still does nothing.

Got my balance to show up in Nostur but can’t send. I don’t want to plug my nsec into Cashu.me, so maybe that’s what’s adding friction?

Anyone have a magic incantation to make this work?
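Not a magic incantation, but one thing worth ruling out is a malformed connection string. Per NIP-47, a Nostr Wallet Connect URI looks like `nostr+walletconnect://<wallet-pubkey-hex>?relay=<relay-url>&secret=<hex>`, optionally with `lud16`. The sketch below (`check_nwc_uri` is a hypothetical name) only checks that those pieces are present and well-formed; it can’t tell you why the wallet or client isn’t actually sending the zap.

```python
import re
from urllib.parse import parse_qs, urlparse

HEX64 = re.compile(r"[0-9a-f]{64}")


def check_nwc_uri(uri: str) -> dict:
    """Parse a NIP-47 connection URI and list any obvious problems."""
    parsed = urlparse(uri.strip())
    params = parse_qs(parsed.query)  # also percent-decodes values like wss%3A%2F%2F
    pubkey = parsed.netloc or parsed.path  # tolerate a missing "//"
    relays = params.get("relay", [])
    secret = params.get("secret", [""])[0]

    problems = []
    if parsed.scheme != "nostr+walletconnect":
        problems.append(f"unexpected scheme {parsed.scheme!r}")
    if not HEX64.fullmatch(pubkey):
        problems.append("wallet pubkey is not 64 lowercase hex chars")
    if not relays:
        problems.append("no relay parameter")
    for relay in relays:
        if not relay.startswith(("wss://", "ws://")):
            problems.append(f"relay {relay!r} is not a websocket URL")
    if not HEX64.fullmatch(secret):
        problems.append("secret is missing or not 64 lowercase hex chars")

    return {
        "pubkey": pubkey,
        "relays": relays,
        "lud16": params.get("lud16", [None])[0],
        "problems": problems,  # the secret itself is deliberately not returned
    }
```

Usage: paste the string your wallet gave you into `check_nwc_uri(...)` and look at `problems`; an empty list just means the string looks sane, so the issue is more likely on the wallet or client side.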

Something seemingly overlooked in all the DeepSeek talk is that Google recently released a proposed successor to the transformer architecture [1].

For anyone who doesn’t know, virtually all of the frontier AI models are based on a transformer architecture that uses something called an attention mechanism. Attention lets the model pick out which tokens in the input sequence are most relevant when predicting the output sequence.
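For the curious, the core of that attention step is small. Below is single-query scaled dot-product attention in plain Python: score each key against the query, scale by √d, softmax the scores into weights, and take the weighted average of the value vectors. It’s a sketch; real models get the query, key, and value vectors from learned projections of the token embeddings and run many heads in parallel over whole matrices, and the numbers here are made up.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max so exp() can't overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: list[float], keys: list[list[float]], values: list[list[float]]) -> list[float]:
    """Scaled dot-product attention for one query over a sequence of key/value pairs."""
    d = len(query)
    # How well the query matches each key, scaled by sqrt(d) to keep scores in a sane range
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)  # non-negative, sums to 1
    # Output is the weighted average of the value vectors
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


# The query lines up with the first key, so the output is pulled toward the first value.
out = attention([4.0, 0.0], keys=[[4.0, 0.0], [0.0, 4.0]], values=[[1.0, 0.0], [0.0, 1.0]])
print([round(x, 3) for x in out])
```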

The attention mechanism relies on learned weights (three matrices that project each token into query, key, and value vectors) that are updated during training, but once training is complete the model remains static. This means that unless you bolt on some type of external memory in your workflow (e.g. store the inputs and outputs in a vector database and have your LLM query it in a RAG setup), your model is limited to what it has already been trained on, plus whatever fits in its context window.
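To make the “bolt on external memory” idea concrete, here’s a toy retrieval sketch. The model’s weights never change; relevant notes are looked up at query time and pasted into the prompt instead. `embed` is a hypothetical stand-in (bag-of-words counts) for a real embedding model, and `ToyVectorStore` and `build_prompt` are hypothetical stand-ins for an actual vector database and prompt template.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical embedding: word counts instead of a learned dense vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing words
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(store: ToyVectorStore, question: str, k: int = 1) -> str:
    """Retrieved notes go into the prompt; the model itself is untouched."""
    context = "\n".join(store.search(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


store = ToyVectorStore()
store.add("the cat sat on the mat")
store.add("bitcoin blocks every ten minutes")
print(build_prompt(store, "how often are bitcoin blocks"))
```

The design choice here is that “memory” lives entirely outside the model: you can add to the store at any time, but the model only benefits from what the retrieval step happens to surface.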

What this new architecture proposes is to add a long-term memory module that can be updated and queried at inference time. The memory is itself a neural network that keeps learning as new inputs arrive, and the machinery that governs how it writes and reads is trained as part of regular training.
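Here’s a heavily simplified sketch of what “updated at inference time” means, loosely following the update rule described in the Titans paper: the memory takes a gradient step on how badly it recalls a key/value pair (the paper’s “surprise”), with momentum and a forgetting term. The simplifications are assumptions of this sketch, not the paper’s design: the memory is a single linear map rather than a deep MLP, the step size, momentum, and forgetting rate are hand-picked constants rather than learned and data-dependent, and keys/values are given directly instead of coming from learned projections. `LinearTestTimeMemory` is a hypothetical name.

```python
def matvec(m: list[list[float]], x: list[float]) -> list[float]:
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


class LinearTestTimeMemory:
    """Associative memory M with value ~ M @ key, written to at inference time."""

    def __init__(self, d_key: int, d_value: int, lr: float = 0.1,
                 momentum: float = 0.9, forget: float = 0.01):
        self.M = [[0.0] * d_key for _ in range(d_value)]  # memory weights
        self.S = [[0.0] * d_key for _ in range(d_value)]  # running "surprise" (momentum)
        self.lr, self.momentum, self.forget = lr, momentum, forget

    def write(self, key: list[float], value: list[float]) -> None:
        """One gradient step on the recall loss ||M k - v||^2."""
        # Recall error uses the memory as it was before this write
        error = [p - v for p, v in zip(matvec(self.M, key), value)]
        for i, row in enumerate(self.M):
            for j in range(len(key)):
                grad = 2.0 * error[i] * key[j]  # derivative of the squared error w.r.t. M[i][j]
                self.S[i][j] = self.momentum * self.S[i][j] - self.lr * grad
                # Forgetting decays old contents, then the surprise is added
                row[j] = (1.0 - self.forget) * row[j] + self.S[i][j]

    def read(self, query: list[float]) -> list[float]:
        return matvec(self.M, query)


mem = LinearTestTimeMemory(d_key=2, d_value=1)
for _ in range(300):
    mem.write([1.0, 0.0], [2.0])
print(mem.read([1.0, 0.0]), mem.read([0.0, 1.0]))
```

After repeated writes, reading with the stored key gives roughly 2 (a bit less, since the forgetting term keeps pulling the memory toward zero), while the unrelated key still reads 0. In the real architecture, training is what tunes how aggressively to write and forget; here those knobs are fixed by hand.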

Where this seems to be heading is that the leading AI labs can release open-weight models that are good at learning, but to really benefit from them you’ll need a lot of inference-time data and compute, which very few people have. It’s another centralizing force in AI.

1. Titans: Learning to Memorize at Test Time, arXiv.org.